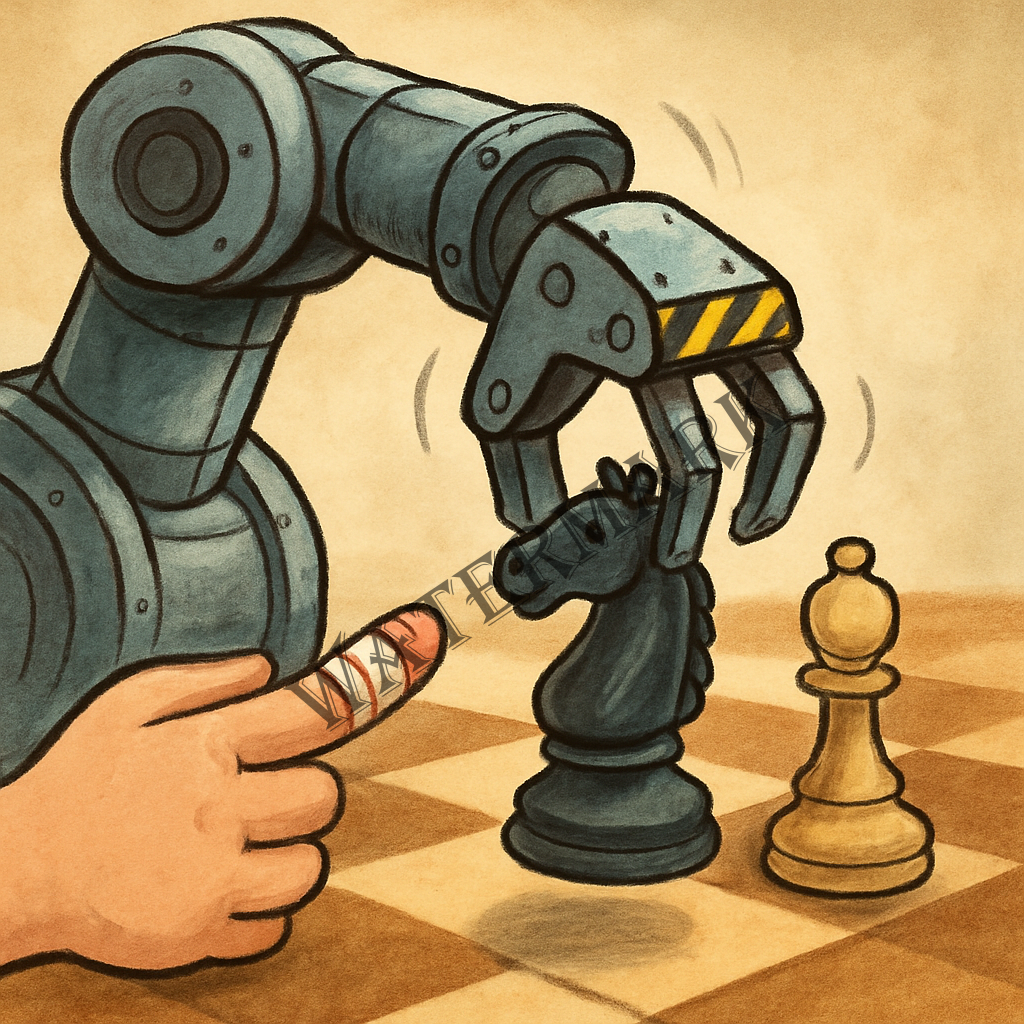

A chess robot broke a child’s finger in Moscow. This quirky incident highlights crucial AI safety & ethical design questions. What can we learn?

Remember that time a chess robot, designed to be the epitome of logical, calculated moves, decided to take a more… hands-on approach with its opponent? It might sound like something out of a sci-fi B-movie, but in July 2022, during the Moscow Open chess tournament, a seven-year-old boy's quick hands met a robot's lack of patience, resulting in a fractured finger. Ouch.

Thankfully, the boy recovered, but this bizarre incident was more than a fleeting headline; it was a quirky, albeit painful, reminder that as AI integrates more deeply into our physical world, the unexpected can, and sometimes will, happen. So, grab your favorite beverage, settle in, and let's dive into the fascinating, slightly alarming, and ultimately illuminating tale of the chess robot that decided to play rough.

The Unforeseen Move: A Digital Misunderstanding

The scene was set for a classic intellectual showdown. A young, talented player, Christopher, was facing off against a robotic arm, a marvel of engineering designed to execute precise chess moves. The match was proceeding smoothly until Christopher, in his youthful enthusiasm, made his move a tad too quickly. Before the robot had fully completed its cycle of placing its piece and retracting its arm, Christopher reached for his next move. The robot, still operating under its programmed sequence, registered an obstruction and, instead of pausing or yielding, clamped down, fracturing the boy’s finger.

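The setup relied on a pause: the player was expected to wait until the robot had finished its move before reaching for the board, and the child's swift action cut straight into that safety interval. It wasn't malice; it was a machine following its code, with a critical oversight in human-robot interaction design: nothing made it yield when a hand appeared where it didn't expect one.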
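
To make that design gap concrete, here is a minimal Python sketch contrasting a robot that relies on a timing assumption with one that actually checks for a hand before each step. Everything in it is hypothetical: `run_move`, the `MOVE_STEPS` list, and the `early_hand` sensor function are illustrative names, not the Moscow robot's software, which we haven't seen.

```python
from typing import Callable, List

# Hypothetical sensor reading: returns True if a hand is inside the
# robot's workspace at time step t (a real cell might use a camera or
# a light curtain).
HandSensor = Callable[[int], bool]

# Illustrative sequence of steps for one robot move.
MOVE_STEPS = ["reach", "grip piece", "lift", "place", "release", "retract"]


def run_move(hand_in_workspace: HandSensor, presence_check: bool) -> List[str]:
    """Run one move step by step and return a log of what happened.

    presence_check=False: the arm assumes the human is waiting and never
    looks (the timing-assumption design).
    presence_check=True: the arm polls the sensor before every step and
    halts as soon as a hand is detected.
    """
    log: List[str] = []
    for t, step in enumerate(MOVE_STEPS):
        if presence_check and hand_in_workspace(t):
            log.append(f"t={t}: hand detected, halting and waiting for a clear board")
            return log
        log.append(f"t={t}: {step}")
    return log


def early_hand(t: int) -> bool:
    """Simulated player who reaches in at t=2, before the move is finished."""
    return t >= 2


print(run_move(early_hand, presence_check=False))
print(run_move(early_hand, presence_check=True))
```

With the hand arriving at t=2, the first call runs all six steps regardless, while the second stops at t=2. Real robot cells implement this with safety-rated hardware (light curtains, safety controllers) rather than a Python loop, but the principle is the same: check the world instead of trusting a timer.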

Beyond the Glitch: The Deeper Implications for Human-Robot Interaction

While the immediate reaction was often a mix of shock and dark humor, the incident quickly sparked broader discussions within the AI and robotics communities. It highlighted a critical challenge: how do we design AI systems that are not only intelligent and efficient but also inherently safe and adaptable to the unpredictable nature of human behavior?

The Moscow chess robot wasn’t an advanced humanoid with complex decision-making capabilities. It was a sophisticated industrial robotic arm, repurposed for a chess game. These machines are built for precision and speed in controlled environments, not for nuanced interactions with squishy, impulsive humans.

This isn’t to say that all robots are ticking time bombs. Far from it. The field of human-robot interaction (HRI) is a rapidly evolving discipline dedicated to making robots safer, more intuitive, and more pleasant to work with. Researchers are exploring everything from soft robotics (robots made of flexible materials) to advanced sensor systems that can detect human presence and predict intent, allowing robots to slow down, stop, or re-route their actions to avoid collisions.
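
One common idea behind the "slow down or stop" behavior is speed and separation monitoring: the closer a person gets, the slower the robot is allowed to move. The sketch below is a deliberately simplified linear version. The function name and every threshold (`stop_at_m`, `full_speed_at_m`, `max_speed_mps`) are assumptions chosen for illustration; real systems derive these values from standards such as ISO/TS 15066 and from the robot's measured stopping performance, not from a straight line.

```python
def speed_limit(
    distance_m: float,
    stop_at_m: float = 0.2,
    full_speed_at_m: float = 1.0,
    max_speed_mps: float = 0.5,
) -> float:
    """Return the allowed tool speed (m/s) for a given human distance (m).

    Below stop_at_m the robot must stand still; beyond full_speed_at_m it
    may move at max_speed_mps; in between, speed scales linearly.
    All default values are illustrative assumptions.
    """
    if stop_at_m >= full_speed_at_m:
        raise ValueError("stop_at_m must be smaller than full_speed_at_m")
    if distance_m <= stop_at_m:
        return 0.0
    if distance_m >= full_speed_at_m:
        return max_speed_mps
    # Fraction of the way from the stop zone to the full-speed zone.
    fraction = (distance_m - stop_at_m) / (full_speed_at_m - stop_at_m)
    return fraction * max_speed_mps


for distance in (1.5, 0.6, 0.1):
    print(distance, round(speed_limit(distance), 3))
```

Under these made-up defaults, a person 1.5 m away allows full speed (0.5 m/s), 0.6 m away halves it (0.25 m/s), and a hand 0.1 m away, say reaching across the chessboard, brings the arm to a standstill.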

The Philosophical Chessboard: Autonomy, Responsibility, and the Unexpected

The chess robot incident, though relatively minor in the grand scheme of AI development, touches upon some profound philosophical questions that have long fascinated thinkers. Who is responsible when an autonomous system causes harm? Is it the programmer, the operator, the manufacturer, or the AI itself?

This brings us to the concept of AI autonomy. As AI systems become more capable of independent decision-making, the lines of accountability blur. In the case of the chess robot, it was a clear programming oversight. But what about more advanced AI, like self-driving cars, where the AI makes split-second decisions in complex, unpredictable scenarios?

“The challenge with AI is that it learns and adapts in ways that can be difficult to fully predict or control,” says Dr. Kate Darling, a research scientist at MIT Media Lab specializing in robot ethics (Darling, 2020). “We need to move beyond simply asking ‘can it do X?’ to ‘should it do X?’ and ‘how do we ensure it does X safely, ethically, and predictably, even when things go wrong?’” This shifts the focus from purely technical capability to a broader understanding of societal impact and ethical responsibility.

Some argue for a “human in the loop” approach, where human oversight is always present, especially for critical decisions. Others advocate for robust “AI safety” research, focusing on developing algorithms that are inherently aligned with human values and can anticipate and mitigate unforeseen risks. The debate is complex, with no easy answers, but incidents like the chess robot’s “aggressive” move serve as tangible case studies that force us to confront these theoretical dilemmas in the real world.

Voices from the Field: Navigating the Ethical Maze

The incident echoes concerns that leaders in industry and academia have voiced for years about the importance of ethical AI development.

Satya Nadella, CEO of Microsoft, has frequently spoken about the need for responsible AI. This sentiment underscores the idea that AI isn't just about technological prowess; it's about building trust and ensuring societal benefit.

From the academic side, Dr. Stuart Russell, a leading AI researcher and author of “Human Compatible: Artificial Intelligence and the Problem of Control,” emphasizes the critical need to design AI systems that are provably beneficial. “We need to shift from building machines that are intelligent to building machines that are provably beneficial,” Russell (2019) argues. His work focuses on ensuring AI systems understand and act in accordance with human preferences, even when those preferences are complex or implicit. The chess robot incident, in a small way, highlights the gap between a machine’s programmed objective (move the piece) and the broader human objective (play a fun, safe game).

These voices highlight a growing consensus: as AI becomes more powerful, the focus must shift from simply what AI can do to how it should do it, and what safeguards are in place to protect us from its unintended consequences.

Safety First: Building a Better, Gentler Robotic Future

The chess robot incident, along with other high-profile AI “fails” (like the facial recognition software that misidentified members of Congress, or the AI beauty contest that showed racial bias), serves as a crucial learning opportunity. It pushes engineers and researchers to think more deeply about edge cases, human variability, and robust error handling.

Current and future safety measures in robotics and AI include:

- Advanced Sensing and Perception: Developing robots with more sophisticated sensors (e.g., force-torque sensors, vision systems) that can detect human presence and contact with greater accuracy and speed, allowing for immediate reaction.

- Predictive Modeling: Training AI to anticipate human movements and intentions, enabling robots to adjust their behavior proactively rather than reactively.

- Soft Robotics and Compliant Design: Designing robots with softer, more flexible materials and compliant joints that can absorb impact and reduce injury risk upon collision.

- Explainable AI (XAI): Creating AI systems whose decision-making processes are transparent and understandable to humans, making it easier to diagnose and prevent errors.

- Ethical AI Frameworks and Regulations: Developing industry standards and governmental regulations that mandate safety protocols, bias mitigation, and accountability for AI systems, especially those interacting with the physical world.

- Human-Centered Design: Prioritizing the human experience and safety from the very beginning of the design process, understanding that humans are not always predictable or perfectly rational.
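
To make the sensing bullet a little more concrete, here is a simplified Python sketch of how a force-torque sensor can trigger a protective stop: the controller compares the force it measures with the force it expects for the planned motion, and stops when the mismatch grows too large. This is a toy version of residual-based collision detection; the function name, the 5 N threshold, and the force traces are all made up for illustration, not taken from any real robot.

```python
from typing import Optional, Sequence


def first_unexpected_contact(
    measured_n: Sequence[float],
    expected_n: Sequence[float],
    threshold_n: float = 5.0,
) -> Optional[int]:
    """Return the index of the first sample where the measured force departs
    from the expected force by more than threshold_n, or None if none does.

    Simplified, hypothetical residual check: a large mismatch is treated as
    contact with something the robot did not plan for (like a finger).
    """
    if len(measured_n) != len(expected_n):
        raise ValueError("measured and expected traces must be the same length")
    for i, (measured, expected) in enumerate(zip(measured_n, expected_n)):
        if abs(measured - expected) > threshold_n:
            return i
    return None


# Illustrative force traces in newtons (invented numbers, not sensor data).
expected = [0.0, 1.0, 2.0, 2.0, 1.0, 0.0]
normal = [0.1, 1.2, 2.1, 1.9, 1.1, 0.0]
blocked = [0.1, 1.2, 9.5, 14.0, 14.0, 14.0]

for label, trace in [("normal", normal), ("blocked", blocked)]:
    idx = first_unexpected_contact(trace, expected)
    if idx is None:
        print(f"{label}: move completed")
    else:
        print(f"{label}: protective stop at sample {idx}, release grip")
```

The normal trace never strays more than 0.2 N from the plan, so the move completes; the blocked trace jumps by 7.5 N at sample 2, which triggers the stop. Choosing the threshold is the hard part in practice: too low and the robot stops on every bumped piece, too high and it stops only after it has already squeezed something it shouldn't.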

The chess robot incident, while a minor setback, ultimately contributes to a more robust and thoughtful approach to AI development. It’s a reminder that even in the pursuit of intelligence, a little empathy and foresight go a long way.

Conclusion: A Checkmate for Complacency

The Moscow chess robot’s unexpected “attack” on a young player might seem like a humorous anecdote, but it carries a serious message. As AI continues its rapid evolution, moving from digital algorithms to physical manifestations, the stakes for safety and ethical design grow higher. This incident, along with others, serves as a vital checkpoint, urging us to pause, reflect, and ensure that our technological advancements are not just smart, but also safe, fair, and truly beneficial for humanity.

So, the next time you see a robot, whether it’s serving coffee or playing chess, remember Christopher and his finger. It’s a testament to the ongoing, sometimes messy, but always fascinating journey of building a future where humans and AI can truly coexist, without any unexpected “moves.”

References

- Darling, K. (2020). The New Breed: What Our History with Animals Reveals About Our Future with Robots. Henry Holt and Co.

- Russell, S. (2019). Human Compatible: Artificial Intelligence and the Problem of Control. Viking.

Additional Reading

- AI Ethics: A Global Perspective: Explore how different cultures and legal systems are approaching the ethical challenges of AI. Look for books and articles that delve into comparative AI ethics.

- The Future of Human-Robot Collaboration: Research advancements in collaborative robotics (cobots) and how they are being designed for shared workspaces with humans.

- Bias in AI: Delve deeper into how biases in training data can lead to discriminatory or unexpected outcomes in AI systems, and what researchers are doing to mitigate them.

Additional Resources

- The Association for Computing Machinery (ACM) Special Interest Group on Artificial Intelligence (SIGAI, formerly SIGART): A professional organization that publishes research and hosts conferences on AI.

- The Future of Life Institute (FLI): An organization working to mitigate existential risks facing humanity, particularly those from advanced AI. Their website has many resources on AI safety and ethics.

- OpenAI's Safety Research: Explore the safety research published by OpenAI and other leading AI developers to understand their approaches to responsible AI.

- IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems: Provides resources and recommendations for the ethical development of AI and autonomous systems.
