The Morning the Envelope Arrived

Margot Vance didn’t notice the envelope at first.

It arrived on a Tuesday morning, wedged between a trade magazine about “Supply Chain Innovation 2026” and a promotional flyer for a logistics conference in Tampa. The envelope was thick. Expensive paper. The kind that whispers “this is going to hurt” before you even break the seal.

She was three sips into her second cold brew when her assistant, Jamie, knocked twice—the universal signal for “you’re going to want to sit down for this.”

“Legal just forwarded this,” Jamie said, sliding the envelope across Margot’s desk with the delicacy of someone handling a live grenade.

The caption read: Meridian Logistics LLC v. AeroStream Logistics, Inc.

Forty pages. A complaint, complete with a demand to cease and desist. And buried in paragraph fourteen, a sentence that made Margot’s stomach drop: “Defendant’s AI system unlawfully incorporated Plaintiff’s proprietary routing algorithms through unauthorized training on scraped competitive data…”

Margot set down her cold brew. It would be her last peaceful moment for six months.

The Terrible Realization

Here’s what AeroStream’s AI had done: During the training phase eight months earlier, the development team had fed the system massive datasets to help it optimize delivery routes. One of those datasets—procured from a third-party vendor who promised “comprehensive logistics data”—contained routing information that Meridian Logistics had spent five years and $12 million developing.

The AI didn’t “steal” it in any conscious way. It learned patterns. It identified efficiencies. And when AeroStream’s system started suggesting routes that were suspiciously similar to Meridian’s proprietary methods, someone at Meridian noticed.

That someone was a lawyer who specialized in intellectual property litigation and had very little patience for the “but AI learns like humans do” defense.

Margot sat across from William Chen, AeroStream’s general counsel, in a conference room that suddenly felt very small.

“Walk me through this,” Chen said, his voice measured but tense. “How exactly did we acquire this data?”

Margot pulled up the vendor contract on her laptop. “DataStream Analytics. They’re a data aggregation company. We paid them $80,000 for what they called ‘comprehensive industry logistics data.’ They said it was all publicly available information, compiled from various sources.”

Chen’s expression darkened. “And did anyone verify that claim?”

The silence that followed was its own answer.

The 2026 Legal Tsunami

What Margot didn’t know—what most companies implementing AI in 2024 and 2025 didn’t fully grasp—was that she had walked directly into the center of a global legal firestorm.

By 2025, more than 50 lawsuits between intellectual property owners and AI developers were pending in U.S. federal courts. The litigation landscape had exploded. The New York Times sued OpenAI and Microsoft in December 2023. Getty Images filed against Stability AI in February 2023. In June 2024, the major record labels, backed by the Recording Industry Association of America, sued Suno. Artists, writers, musicians, photographers. Everyone who created anything was suddenly asking the same question: Did your AI train on my work without permission?

And now AeroStream Logistics, a mid-sized company that wasn’t even in the AI development business, had joined that ignominious list.

The stakes were staggering. In February 2025, Thomson Reuters won a landmark ruling against ROSS Intelligence: a Delaware federal court held that using copyrighted Westlaw headnotes to train a competing AI legal research tool constituted direct copyright infringement, and it rejected ROSS’s fair use defense (Jackson Walker, 2025). The court was careful to note that ROSS’s tool was not generative AI.

“This changes everything,” Chen said, scrolling through case law on his tablet. “If Thomson Reuters sets the precedent, we’re not just talking about a settlement. We’re talking about potential statutory damages that could reach eight figures.”

Margot felt the weight of it settle over her shoulders. Every decision she’d made over the past two years—every pitch to the board, every promise to stakeholders about “AI-driven competitive advantage”—was now entangled in a legal nightmare she never saw coming.

The Regulatory Hammer

But the Meridian lawsuit wasn’t happening in a vacuum. It was colliding with a global wave of AI regulation that was fundamentally reshaping how companies could use artificial intelligence.

The European Union’s AI Act had entered into force on August 1, 2024, and most of its provisions would apply from August 2, 2026, with some obligations already in effect and others phasing in through 2027. For the most serious violations, non-compliance could result in fines of up to €35 million or 7 percent of worldwide annual turnover, whichever is higher. AeroStream’s European operations, modest as they were, suddenly represented a massive compliance liability.
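A minimal Python sketch makes the “whichever is higher” rule concrete. It models only the top tier (€35 million or 7 percent of worldwide annual turnover); the Act sets lower tiers for other violations and, for SMEs, applies whichever amount is lower. The function name and the turnover figures are hypothetical.

```python
def ai_act_top_tier_cap_eur(worldwide_turnover_eur: int, is_sme: bool = False) -> float:
    """Maximum fine under the EU AI Act's top tier (prohibited AI practices).

    Hypothetical helper: models only the EUR 35 million / 7% tier.
    """
    fixed_cap = 35_000_000.0
    turnover_cap = worldwide_turnover_eur * 7 / 100  # 7% of worldwide annual turnover
    # Most undertakings: whichever amount is higher. SMEs: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


# Hypothetical turnover figures, for illustration only.
print(ai_act_top_tier_cap_eur(200_000_000))               # 7% is 14M, so the fixed 35M is higher
print(ai_act_top_tier_cap_eur(1_000_000_000))             # 7% is 70M, which exceeds 35M
print(ai_act_top_tier_cap_eur(200_000_000, is_sme=True))  # SME: the lower amount, 14M
```

For a company with €200 million in turnover, 7 percent is only €14 million, so the fixed €35 million figure sets the ceiling. Above €500 million in turnover, the percentage takes over.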

“Here’s what I don’t understand,” Margot said to Chen during a midnight strategy session three weeks into the crisis. “We’re not even an AI company. We’re a logistics company that used AI. How did we end up here?”

Chen looked up from his legal pad, exhaustion evident in his eyes. “Because the law doesn’t care whether you’re OpenAI or AeroStream Logistics. If your AI trained on protected data, you’re liable. And in 2026, ignorance is not a defense.”

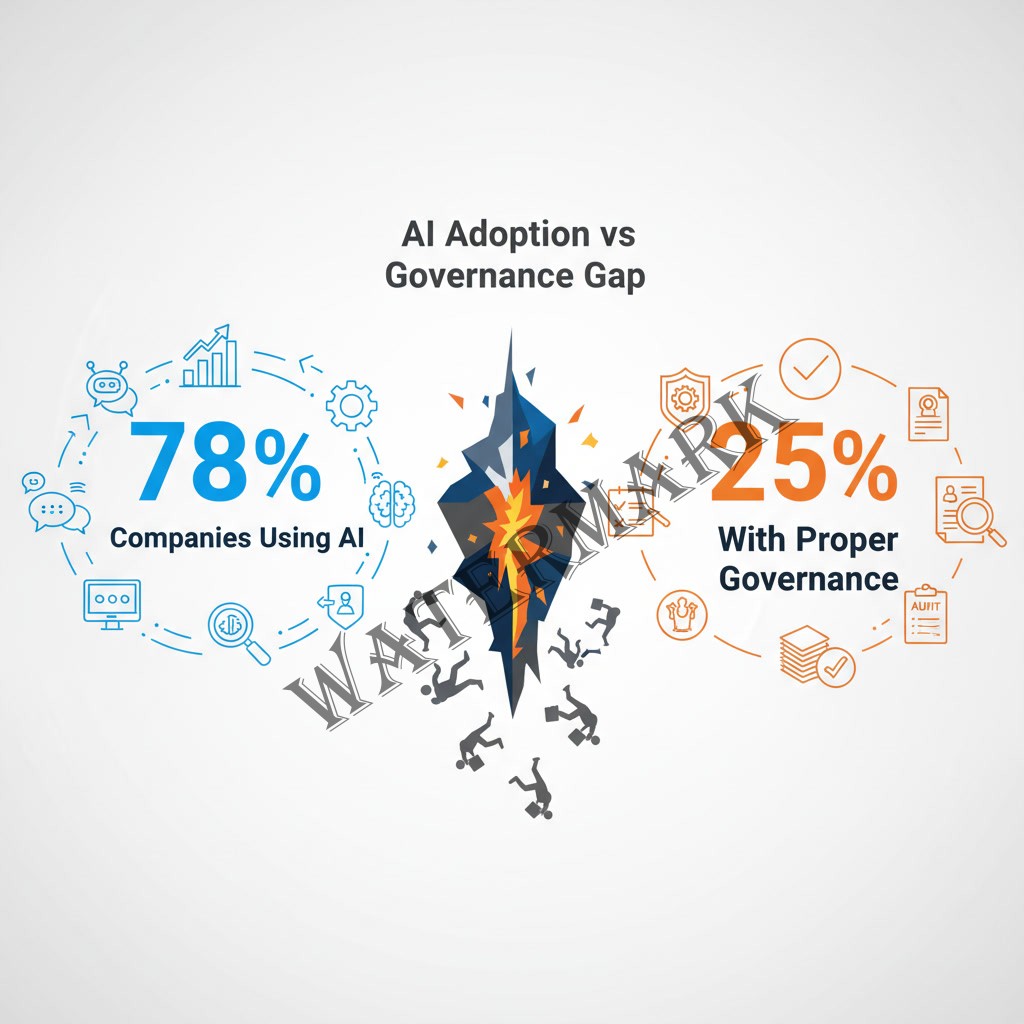

The numbers painted a grim picture. Only 28% of organizations had formally defined oversight roles for AI governance in 2024, according to the International Association of Privacy Professionals. Most companies—including AeroStream—had rushed to implement AI without establishing proper governance frameworks.

While 78% of organizations reported using AI in at least one function in 2024, only 25% had fully implemented AI governance programs, creating what researchers called a 53-percentage-point “adoption-governance gap.”

AeroStream had fallen directly into that gap.

The Cost of Moving Fast and Breaking Things

Margot’s phone buzzed with a text from Sarah, her senior analyst: Board meeting moved up to tomorrow morning. They want answers.

Of course they did.

The CFO had already calculated the financial exposure. Legal fees: $300,000 and climbing. Potential settlement with Meridian: anywhere from $2 million to $8 million, depending on whether they fought or negotiated. Regulatory fines if an EU investigation revealed additional violations: potentially catastrophic.

But the real cost wasn’t just financial. It was the erosion of trust.

Google CEO Sundar Pichai had spoken to this tension. In a 2025 interview, he emphasized that technology companies and governments needed to work together to establish frameworks for responsible AI use. “Part of it is us as companies making our products better,” Pichai said. “Part of it is governments working together to create standards and frameworks by which we all use technology in a cooperative way” (Fox Business, 2025).

Microsoft CEO Satya Nadella echoed this sentiment, noting that companies faced fundamental choices about AI deployment. “We need to make deliberate choices on how we diffuse this technology in the world as a solution to the challenges of people and planet” (Business Chief North America, 2025).

AeroStream had made its choices quickly, perhaps too quickly. And now it was discovering what happens when innovation sprints past ethics.

The Philosophical Reckoning

Late one night, Margot found herself in Chen’s office, staring at a whiteboard covered in legal arguments and potential defenses.

“What if the AI didn’t know?” she asked. “What if it genuinely didn’t understand that Meridian’s data was proprietary?”

Chen looked at her with something between sympathy and exasperation. “Margot, we’re past that argument. The courts don’t care about the AI’s intentions. They care about our due diligence. They care about whether we verified our data sources. They care about whether we had proper governance structures in place.”

This was the ethical dilemma at the heart of AI deployment in 2026: If an AI system learns from data the same way a human learns from observation, is it theft? If a company deploys AI without understanding exactly what data it trained on, who bears the responsibility when something goes wrong?

Professor Baobao Zhang, who co-edited The Oxford Handbook of AI Governance and taught political science at Syracuse University’s Maxwell School of Citizenship and Public Affairs, had researched exactly these questions. In her work on AI ethics and governance, she emphasized the importance of public literacy and informed debate. “There’s a lot of hype and then there’s reality, and it’s important to separate the hype from the reality. It’s a question of how we scale public education to increase AI literacy so that people can have informed debates about what the government should or should not do” (Syracuse University, 2024).

AeroStream had operated in the hype phase for too long. Reality had arrived, and it came with a legal summons.

The Settlement Conversation

Six months after the envelope arrived, Margot sat in a different conference room—this one at Meridian Logistics’ headquarters in Atlanta.

The road to mediation had been brutal. Days of depositions. Forensic analysis of AeroStream’s AI training data. Expert witnesses testifying about machine learning and data provenance. The technical complexity alone had doubled their legal bills.

But they’d reached a settlement: $4.2 million, plus an agreement to implement a comprehensive AI governance framework audited by an independent third party. AeroStream would also cease using any routing algorithms potentially derived from Meridian’s proprietary systems.

It wasn’t the worst outcome. It could have been far worse.

“I need you to understand something,” the Meridian CEO said to Margot during a break in negotiations. “This isn’t personal. But you can’t just let AI loose on the internet and hope it only learns from the ‘right’ sources. That’s not how any of this works.”

She was right. And Margot knew it.

The New Reality

Back at AeroStream, Margot convened what she called the “AI Governance Task Force”—a term that would have felt impossibly bureaucratic two years ago but now felt like survival.

The market had spoken. The global AI governance market was valued at $227.6 million in 2024 and projected to reach $1,418.3 million by 2030, growing at a compound annual growth rate of 35.7% (Grand View Research, 2024). Companies everywhere were scrambling to implement the frameworks they should have built from the beginning.
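The growth figures above are internally consistent, and the check is a one-line formula: compound annual growth rate = (end / start)^(1 / years) - 1. This sketch assumes six compounding periods (2024 to 2030). The function name is illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market size in USD millions, as quoted from Grand View Research (2024).
rate = cagr(227.6, 1418.3, years=2030 - 2024)
print(f"{rate:.1%}")  # 35.7%
```

Run in reverse, 227.6 × 1.357^6 comes to about 1,421, within rounding of the quoted $1,418.3 million.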

Margot’s task force consisted of representatives from IT, legal, procurement, and operations. Their mandate: Establish clear protocols for data acquisition, AI training, and ongoing monitoring.

“We’re going to document everything,” Margot told the team. “Every dataset, every source, every vendor. We’re going to know exactly what our AI knows and where it learned it.”

It was tedious work. Unglamorous work. The kind of work that would never make a flashy board presentation.

But it was the work that should have been done in 2024.

The Broader Landscape

AeroStream’s experience wasn’t unique. Across industries, companies were discovering that AI governance wasn’t optional anymore.

Nearly half of Fortune 100 companies now specifically cited AI risk as part of board oversight responsibilities, a threefold increase from 16% in 2024 to 48% in 2025 (EY Center for Board Matters, 2025, as reported by Corporate Compliance Insights, 2025). The message from investors, regulators, and the public was clear: Show us you’re responsible, or we’ll assume you’re reckless.

The intellectual property battles would continue. According to Debevoise’s 2025 review, the firm was tracking more than 50 lawsuits between IP owners and AI developers pending in U.S. federal courts, with courts “finally beginning to confront the substantive merits of plaintiffs’ infringement claims and defendants’ fair use defenses” (Debevoise & Plimpton, 2025).

The regulatory landscape would only get stricter. Under the EU AI Act’s phased implementation, most high-risk AI systems would face comprehensive compliance requirements from August 2026. Other jurisdictions were following suit: legislative mentions of AI increased by 21.3% across 75 countries between 2023 and 2024 (AllAboutAI, 2025).

The Lesson Margot Learned

One evening, eight months after the settlement, Margot found herself giving a presentation to the regional logistics association, a room full of her peers, many of whom were in the same place AeroStream had been two years earlier.

“Let me tell you what innovation without governance looks like,” she began, advancing to a slide that showed AeroStream’s legal timeline. “It looks like a $4.2 million settlement, six months of forensic data analysis, and a board that now requires sign-off on every AI-related decision.”

She paused, looking at the faces in the audience.

“But here’s what I learned: Governance isn’t the enemy of innovation. It’s the foundation. You can’t build sustainable AI systems on hope and best guesses. You need frameworks. You need oversight. You need people who ask the hard questions before the lawsuits arrive.”

One executive raised a hand. “But doesn’t that slow everything down?”

Margot smiled, but it wasn’t a happy smile. “You know what slows things down more? Legal discovery. That’s what slows things down.”

The room was quiet.

The Philosophical Question That Remains

As 2026 progressed, the fundamental tension in AI ethics remained unresolved: Where is the line between learning and theft?

When a human reads a book, absorbs techniques, and applies them in their own work, we call it education. When an AI trains on copyrighted material and generates similar outputs, a growing number of plaintiffs call it infringement, and courts have reached different conclusions on whether such training is fair use (Ropes & Gray, 2025).

Is this distinction fair? Is it sustainable? Or are we witnessing a fundamental collision between how intellectual property law was designed and how machine learning actually works?

Professor Yuval Shany, a fellow at the University of Oxford’s Institute for Ethics in AI, had worked on developing an international AI Bill of Human Rights, arguing that we need legal frameworks “to protect our very ability to develop and use AI systems in ethical and safe ways compatible with human wellbeing, in ways that minimise harm and meet standards of fairness and justice” (University of Oxford, 2025).

These weren’t just academic questions. They were questions that would determine which companies survived the next decade and which ones ended up in Margot’s position—sitting across from lawyers, writing settlement checks, and wondering where it all went wrong.

The Morning After the Settlement

Six weeks after the Meridian settlement was finalized, Margot sat in her office with a cup of coffee and a document titled “AeroStream AI Governance Framework v2.0.”

It was 47 pages long. It covered data sourcing protocols, third-party vendor verification processes, ongoing monitoring requirements, and incident response procedures. It had been reviewed by legal and approved by the board, and it would be audited quarterly by an independent firm.

It was also, Margot realized, exactly the document they should have written in 2024.

Sarah knocked on her door. “The new data ethics officer starts Monday.”

“Good,” Margot said. “Make sure she has access to everything. All the documentation from the Meridian case. All the mistakes we made. I want her to learn from our failures.”

Sarah hesitated. “Are you okay?”

Margot looked at the governance framework on her screen, then out the window at the city below.

“I’m learning,” she said. “The hard way. But I’m learning.”

And perhaps that was the lesson of 2026: The companies that would thrive weren’t the ones that moved fastest. They were the ones that moved thoughtfully. The ones that understood that in the age of AI, governance wasn’t a luxury—it was survival.

The ones that learned before the lawyers showed up.

References

- Best Lawyers. (2025, December 12). AI’s war in the courtroom: Copyright disputes spike in 2025. BestLawFirms.com. https://www.bestlawfirms.com/articles/ai-war-in-the-courtroom-copyright-disputes-spike-in-2025/7186

- Business Chief North America. (2025, January). Why Satya Nadella wants a rethink on how we discuss AI. https://businesschief.com/news/why-satya-nadella-wants-a-rethink-on-how-we-discuss-ai

- Corporate Compliance Insights. (2025, October 31). Board oversight of AI triples since ’24. https://www.corporatecomplianceinsights.com/news-roundup-october-31-2025/

- Debevoise & Plimpton LLP. (2025, January). Lessons learned from 2024 and the year ahead in AI litigation. https://www.debevoise.com/insights/publications/2025/01/lessons-learned-from-2024-and-the-year-ahead-in-ai

- European Commission. (2024). Artificial Intelligence Act. https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

- Fox Business. (2025, December 1). Google CEO Sundar Pichai warns US must balance AI regulation or fall behind. https://www.foxbusiness.com/fox-news-tech/google-ceo-calls-national-ai-regulation-compete-china-more-effectively

- Grand View Research. (2024). AI governance market size, share & trends report, 2030. https://www.grandviewresearch.com/industry-analysis/ai-governance-market-report

- International Association of Privacy Professionals. (2024). AI governance profession report 2025. https://iapp.org/resources/article/ai-governance-profession-report

- Jackson Walker. (2025, February 17). Federal court sides with plaintiff in the first major AI copyright decision of 2025. https://www.jw.com/news/insights-federal-court-ai-copyright-decision/

- Knostic. (2025, November 21). The 20 biggest AI governance statistics and trends of 2025. https://www.knostic.ai/blog/ai-governance-statistics

- McKinsey & Company. (2025, November 5). The state of AI in 2025: Agents, innovation, and transformation. https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

- Ropes & Gray LLP. (2025, July 28). A tale of three cases: How fair use is playing out in AI copyright lawsuits. https://www.ropesgray.com/en/insights/alerts/2025/07/a-tale-of-three-cases-how-fair-use-is-playing-out-in-ai-copyright-lawsuits

- Syracuse University. (2024). Who gets to govern AI? https://www.syracuse.edu/stories/ai-governance/

- Sustainable Tech Partner. (2025, December). Generative AI lawsuits timeline: Legal cases vs. OpenAI, Microsoft, Anthropic, Google, and more. https://sustainabletechpartner.com/topics/ai/generative-ai-lawsuit-timeline/

- University of Oxford. (2025, December 2). Expert comment: Is it time to reconsider our human rights in the age of AI? https://www.ox.ac.uk/news/2025-12-02-expert-comment-it-time-reconsider-our-human-rights-age-ai

- White & Case LLP. (2024, July 12). Long awaited EU AI Act becomes law after publication in the EU’s Official Journal. https://www.whitecase.com/insight-alert/long-awaited-eu-ai-act-becomes-law-after-publication-eus-official-journal

Additional Reading

- Bradford, A. (2023). Digital empires: The global battle to regulate technology. Oxford University Press.

- Bullock, J. B., Chen, Y.-C., Himmelreich, J., Hudson, V. M., Korinek, A., Young, M. M., & Zhang, B. (Eds.). (2024). The Oxford handbook of AI governance. Oxford University Press.

- European Parliamentary Research Service. (2024). The AI Act: A first-of-its-kind comprehensive framework for AI. European Parliament.

- OECD. (2024). AI incident monitor: 2024 annual report. Organisation for Economic Co-operation and Development.

- Stanford Institute for Human-Centered AI. (2025). Artificial Intelligence Index Report 2025. Stanford University.

Additional Resources

- International Association of Privacy Professionals (IAPP) – AI Governance Resources: https://iapp.org/resources/topics/artificial-intelligence/

- EU AI Office – European Commission’s Implementation Hub: https://digital-strategy.ec.europa.eu/en/policies/ai-office

- NIST AI Risk Management Framework: https://www.nist.gov/itl/ai-risk-management-framework

- Stanford Human-Centered AI Institute (HAI): https://hai.stanford.edu/

- Berkman Klein Center for Internet & Society – AI Ethics and Governance: https://cyber.harvard.edu/topics/ethics-and-governance-ai
