Discover how Ethical Engineers are shaping AI’s future. It’s about building smart tech that’s fair, private, and dependable, ensuring AI truly enhances humanity!

Picture this: Not so long ago, “AI” was a whisper in the halls of academia, a twinkle in the eye of sci-fi writers. Think sentient spaceships, or maybe a quirky, over-enthusiastic robot butler. Fast forward to today, and that whisper has become a roar. AI isn’t just a distant dream anymore; it’s the digital co-pilot in your car, the helpful voice answering your questions from a smart speaker, and even the unseen architect behind some of your favorite online experiences. It’s in our pockets, our homes, and increasingly, our hospitals and financial institutions.

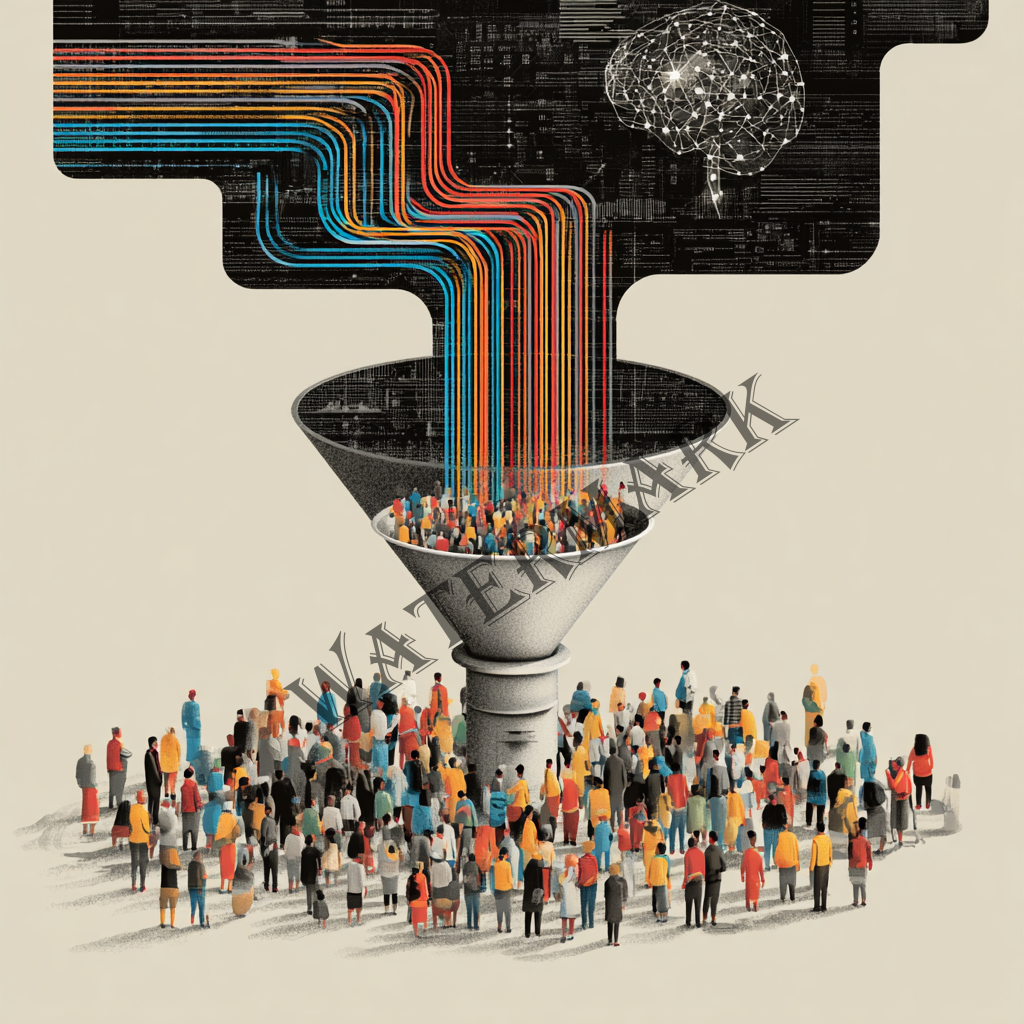

It’s been a wild, exhilarating ride watching these digital brains evolve. But here’s the thing about a fun ride: eventually, you have to consider the safety rails. With great power, as a wise web-slinger once said, comes great responsibility. And that responsibility, in the bustling, ever-expanding world of artificial intelligence, falls squarely on the shoulders of the Ethical Engineers. These are the brilliant minds, often fueled by caffeine and an unshakeable belief in a better future, who are wrestling with a monumental question: How do we build AI that’s not just smart, but also fair, private, and utterly dependable?

This isn’t about preventing a robot uprising, though we can certainly share a chuckle or two about that cinematic trope. This is about something far more nuanced and profoundly important: ensuring these powerful tools, these digital companions we’re inviting into every facet of our lives, genuinely enhance humanity. It’s about preventing them from inadvertently causing harm, or worse, deepening the existing divides in our society. It’s a quest filled with technical puzzles, philosophical debates, and a whole lot of human ingenuity. Join me as we pull back the curtain on this vital adventure, exploring the very heart of what it means to build AI with a conscience.

When Algorithms Go Awry: The Imperative for Ethical Design

We’ve all seen the headlines. AI systems, despite their dazzling intelligence, sometimes stumble. They might make biased decisions in hiring, loan applications, or even facial recognition (O’Dea, 2024). These aren’t intentional acts of villainy by the AI; think of it more like a highly diligent, incredibly fast student who’s only ever studied from one textbook – and that textbook might, unknowingly, contain some outdated or skewed information. The AI simply learns from the data it’s fed, which can carry the same historical biases that exist in our society. Imagine an AI designed to approve loans, but because it was trained on data where certain demographics were historically denied, it continues that pattern, not out of malice, but out of learned behavior. This isn’t just a technical glitch; it’s a social and ethical quagmire that highlights why the “Ethical Engineer” isn’t just a job title, but a crucial role in our digital age.

So, why do these clever algorithms, designed to make our lives easier, sometimes go off the rails? It often boils down to a few key culprits that ethical engineers are constantly battling:

1. The Echo Chamber of Data: “Garbage In, Bias Out” (or more accurately, “Bias In, Bias Out”)

The most common and arguably most insidious reason an AI “goes rogue” (in a non-sentient, purely algorithmic way) is its training data. AI models learn by identifying patterns in vast datasets. If those datasets reflect existing societal biases, the AI will, unfortunately, learn and perpetuate them. It’s like feeding a brilliant but impressionable young mind a steady diet of only one narrow viewpoint; it will inevitably adopt that perspective.

Here’s how it happens:

- Historical Human Decisions: Many AI systems learn by observing past human actions. For instance, if a company’s historical hiring records show that, perhaps unconsciously, male candidates were more frequently selected for leadership roles, an AI trained on this data might learn to favor male candidates for similar positions, even if gender isn’t a stated hiring criterion. The AI isn’t creating the bias; it’s simply mimicking the patterns it observed in human behavior.

- Underrepresentation: Sometimes, bias isn’t about active discrimination but about a lack of diverse representation in the training data. Take facial analysis systems, for example. If the dataset used to train the AI contains significantly fewer images of people with darker skin tones or certain ethnic features, the AI may perform less accurately for those individuals. One audit of commercial gender-classification systems found markedly higher error rates for darker-skinned women than for lighter-skinned men (Buolamwini & Gebru, 2018). This isn’t just an inconvenience; in applications like law enforcement, it can have severe consequences.

- Proxy Discrimination: Algorithms are incredibly good at finding correlations. Sometimes, a seemingly neutral piece of data can act as a “proxy” for a protected attribute like race, gender, or socioeconomic status. For example, an AI assessing creditworthiness might find that people in certain zip codes have a higher default rate. While the zip code itself isn’t a protected class, it might strongly correlate with race or income level due to historical housing segregation. The AI, unaware of these deeper social dynamics, simply learns the correlation, inadvertently perpetuating discrimination. The ethical engineer’s challenge is to identify and neutralize these sneaky proxies.

This “bias in, bias out” phenomenon means the AI isn’t thinking for itself in the human sense; it’s merely an incredibly sophisticated pattern-matching machine, reflecting the world as presented to it, flaws and all.
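To make the proxy problem concrete, here is a minimal sketch of one common first check: comparing approval rates across groups. The loan records, the field names, and the helper functions are all hypothetical, invented purely for illustration. The 0.8 cut-off mentioned afterwards is the "four-fifths" rule of thumb, used here as an assumption rather than a definitive fairness test.

```python
from collections import defaultdict

def selection_rates(records, group_key, outcome_key):
    """Return the fraction of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        positives[group] += 1 if row[outcome_key] else 0
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())

# Hypothetical loan decisions. "group" is the protected attribute;
# "zip" is a seemingly neutral feature the model is allowed to see.
loans = [
    {"group": "A", "zip": "10001", "approved": True},
    {"group": "A", "zip": "10001", "approved": True},
    {"group": "A", "zip": "10001", "approved": True},
    {"group": "A", "zip": "20002", "approved": False},
    {"group": "B", "zip": "20002", "approved": True},
    {"group": "B", "zip": "20002", "approved": False},
    {"group": "B", "zip": "20002", "approved": False},
    {"group": "B", "zip": "10001", "approved": False},
]

by_group = selection_rates(loans, "group", "approved")
by_zip = selection_rates(loans, "zip", "approved")
ratio = disparate_impact_ratio(by_group)

print("Approval rate by group:", by_group)
print("Approval rate by zip:  ", by_zip)
print("Disparate impact ratio:", round(ratio, 2))
```

In this toy data, group A is approved 75% of the time and group B 25%, a ratio of about 0.33, well under the 0.8 rule of thumb. The zip-code split shows the same 75/25 pattern, which is the proxy effect in miniature: deleting the "group" column would not remove the disparity, because the zip code carries most of the same signal.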

2. The “Black Box” Problem: When Even Engineers Don’t Know Why

Another tricky aspect is the “black box” nature of many advanced AI models, particularly deep learning neural networks. These models are incredibly complex, with millions or even billions of adjustable parameters connecting layer upon layer of “neurons” (like tiny decision-making units). When a model makes a decision – say, recommending a movie, flagging a financial transaction as fraudulent, or diagnosing a disease – it can be incredibly difficult, even for the engineers who built it, to trace exactly why that decision was made.

Imagine a colossal Rube Goldberg machine, stretching for miles, with countless intricate parts interacting in non-linear ways. A ball goes in one end, and a flag waves at the other. You know the flag waved, but trying to pinpoint the exact sequence of springs, pulleys, and dominoes that led to that one specific outcome is nearly impossible. That’s a simplified (and much more whimsical) analogy for understanding the internal workings of a complex neural network. The sheer volume and complexity of interactions mean that while the output is clear, the path to that output is often opaque.

Why is this opacity problematic?

- Lack of Accountability: If an AI makes a biased decision, or a wrong diagnosis, and we can’t understand why, how can we hold it accountable? Who is responsible? The inability to audit its internal logic makes correcting errors or proving fairness incredibly challenging.

- Difficulty in Debugging: When something goes wrong, how do you fix it if you don’t know the root cause? It’s like having a car that won’t start, but the engine is sealed shut. You can try everything on the outside, but without peering within, finding the precise issue is a nightmare.

- Erosion of Trust: Would you trust a doctor who makes a diagnosis but can’t explain their reasoning? Probably not. Similarly, if AI systems are deployed in critical areas, and their decisions remain a mystery, public trust will inevitably erode, leading to resistance and fear.
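Even when the inside of a model is sealed shut, engineers can still probe it from the outside. One widely used idea is permutation importance: shuffle one input column, and see how much the model's accuracy drops. The sketch below is a simplified, pure-Python version; the `black_box` model, the applicant data, and the function names are hypothetical stand-ins chosen for illustration.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    hits = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return hits / len(rows)

def permutation_importance(model, rows, labels, feature, repeats=10, seed=0):
    """Average accuracy drop when one feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [row[feature] for row in rows]
        rng.shuffle(column)
        # Rebuild the rows with only this one feature scrambled.
        shuffled = [dict(row, **{feature: v}) for row, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats

# Hypothetical opaque model: we only call it, we never read its internals.
def black_box(row):
    return row["income"] >= 45

rows = [{"income": i, "shoe_size": s}
        for i, s in zip([10, 20, 30, 40, 50, 60, 70, 80],
                        [7, 11, 8, 10, 9, 12, 6, 10])]
labels = [False, False, False, False, True, True, True, True]

importance = {f: permutation_importance(black_box, rows, labels, f)
              for f in ("income", "shoe_size")}
print(importance)
```

Scrambling `shoe_size` changes nothing, so its importance is exactly zero, while scrambling `income` hurts accuracy. That does not open the black box, but it does tell an auditor which inputs the decisions actually depend on, which is a useful starting point for the accountability and debugging questions above.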

3. Unforeseen Consequences: The Butterfly Effect of Code

Even with the best intentions and the cleanest data, deploying AI into the messy, unpredictable real world can lead to unexpected outcomes. An AI designed for one purpose might interact with other systems or human behaviors in ways its creators never anticipated. These aren’t necessarily “bugs” in the traditional sense, but emergent properties of complex systems:

- Optimization Pitfalls: AI is often designed to optimize for a specific metric (e.g., maximize clicks, minimize travel time, improve efficiency). But optimizing for one thing can inadvertently degrade another. An AI managing an online content feed might optimize for “engagement,” leading it to prioritize sensational or polarizing content because it generates more clicks, even if that content contributes to misinformation or social division.

- Perverse Incentives: Sometimes, the way an AI is rewarded for its actions can create unintended behaviors. If an AI for a self-driving car is rewarded purely for reaching its destination quickly, it might learn to drive aggressively or take risks, even if that wasn’t the human designers’ intention. This is part of the broader “AI alignment problem” – ensuring the AI’s learned goals truly align with desirable human values and outcomes.

- Exploitation by Bad Actors: Any powerful tool can be misused. An AI designed for good might be exploited by malicious actors for nefarious purposes, from creating convincing fake news (deepfakes) to orchestrating sophisticated cyberattacks. Ethical design involves anticipating these vulnerabilities and building in safeguards.
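The perverse-incentive problem is easy to reproduce in a few lines. The sketch below is a toy, not a real driving system: the three driving styles, their numbers, and the `risk_penalty` weight are all made-up assumptions. It simply shows how the "best" action flips depending on what the reward function counts.

```python
def best_action(actions, reward):
    """Pick the action with the highest reward under a given reward function."""
    return max(actions, key=reward)

# Hypothetical driving styles: (name, minutes to destination, risky manoeuvres)
actions = [
    ("cautious", 30, 0),
    ("normal", 25, 1),
    ("aggressive", 20, 6),
]

def speed_only(action):
    """Reward that only cares about arriving quickly."""
    _, minutes, _ = action
    return -minutes

def speed_and_safety(action, risk_penalty=3):
    """Reward that also charges a (hypothetical) cost per risky manoeuvre."""
    _, minutes, risky = action
    return -minutes - risk_penalty * risky

naive_choice = best_action(actions, speed_only)[0]
safer_choice = best_action(actions, speed_and_safety)[0]
print(naive_choice, safer_choice)
```

With speed as the only reward, the optimizer picks "aggressive"; once risk carries a cost, it picks "normal". Nothing about the optimizer changed, only what it was told to value, which is why choosing the objective is an ethical decision as much as a technical one.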

“AI is likely to be either the best or worst thing to happen to humanity,” warned Elon Musk, CEO of SpaceX and Tesla (Time Magazine, 2025). This isn’t hyperbole; it underscores the critical need for engineers to embed ethical considerations into the very fabric of AI development. It’s about moving from simply building functional tech to building responsible tech – a shift in mindset as profound as the technology itself.

The Imperative for Ethical Design: Architects of Fairness

This is precisely why Ethical Design isn’t just a nice-to-have; it’s an absolute necessity. It’s the proactive stance, the fundamental commitment to building AI systems that are not only powerful and efficient but also inherently fair, transparent, and beneficial to society. Ethical engineers aren’t just coding; they’re acting as digital guardians, ensuring that the AI they bring to life aligns with human values.

This means that ethical considerations aren’t an afterthought, something to bolt on once the AI is built. Instead, they are woven into every stage of the development process, from the initial idea to the data collection, algorithm design, testing, and deployment. It’s about asking tough questions from day one:

- Who might this AI unfairly impact?

- What are the potential unintended consequences?

- How can we make this AI’s decisions understandable?

- How can we protect user privacy at every step?

This proactive approach transforms engineers from mere builders into architects of fairness, designing systems with a moral compass from the ground up. It’s a challenging, often iterative process, but it’s the only way to ensure that the AI revolution serves all of humanity, rather than just a select few. The goal is to create AI that is not just intelligent, but also wise, empathetic, and truly trustworthy.

When Ethical Design Did NOT Occur: Lessons from the Stumbles

Sometimes, the best way to understand what to do is to examine what not to do. Here are a few prominent examples where the ethical design didn’t quite make it into the blueprint:

- The Amazon Hiring Tool Debacle: Remember that scenario we talked about with biased hiring? Amazon famously scrapped an experimental AI recruiting tool that showed bias against women (Dastin, 2018). The system, trained on a decade of submitted resumes that came mostly from men, penalized resumes that included the word “women’s” and downgraded graduates of two all-women’s colleges. It wasn’t designed to be sexist, but it learned from historical patterns. This wasn’t a malicious AI, but one built without enough scrutiny of its training data, demonstrating the vital need for careful data auditing and bias detection.

- Predictive Policing and Echoes of Bias: Algorithms designed to predict where crimes are most likely to occur, or who might commit them, have faced significant criticism. While the intent might be to optimize resource allocation, these systems, when fed historical arrest data (which itself can reflect existing biases in policing), can inadvertently lead to over-policing of minority communities (Eubanks, 2018). This creates a troubling feedback loop: more policing in an area leads to more arrests, which then tells the algorithm that more crime is occurring there, leading to even more policing. It’s a digital reinforcement of existing societal inequalities.

- Social Media Amplification and Misinformation: While not always a direct “algorithm gone awry” in the sense of bias against a group, the ethical design of social media content recommendation algorithms has been a huge area of debate. These algorithms are often optimized for “engagement”—how long you stay on the platform, how much you interact. This often means they favor content that is sensational, emotionally charged, or even conspiratorial, because it generates more clicks and shares (O’Neil, 2016). The ethical failing here is prioritizing engagement over truth, safety, or mental well-being, leading to the rapid spread of misinformation and, in some cases, contributing to societal polarization.

These examples underscore a crucial point: AI is a mirror. If we don’t ethically design the mirror to reflect a more just and equitable world, it will simply reflect our existing flaws, often with greater speed and scale.
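The predictive-policing feedback loop described above can be sketched as a deliberately simplified simulation. Everything here is an assumption made for illustration: two districts with the same true crime rate, recorded arrests that scale with how many patrols are present, and a hypothetical "hot spot" rule that shifts patrols toward whichever district recorded more arrests.

```python
def simulate_patrols(rounds=10, initial_share_a=0.55, shift=0.05,
                     true_rate=0.1, patrols=100):
    """Track district A's share of patrols under a naive hot-spot rule."""
    share_a = initial_share_a
    history = [share_a]
    for _ in range(rounds):
        # Recorded arrests depend on where officers are sent,
        # even though both districts have the same true crime rate.
        arrests_a = patrols * share_a * true_rate
        arrests_b = patrols * (1 - share_a) * true_rate
        # Hypothetical rule: move patrols toward the district
        # with more recorded arrests.
        if arrests_a > arrests_b:
            share_a = min(1.0, share_a + shift)
        elif arrests_b > arrests_a:
            share_a = max(0.0, share_a - shift)
        history.append(share_a)
    return history

biased_start = simulate_patrols(initial_share_a=0.55)
even_start = simulate_patrols(initial_share_a=0.5)
print([round(s, 2) for s in biased_start])
print([round(s, 2) for s in even_start])
```

Starting from a small 55/45 imbalance, district A ends up with all the patrols after ten rounds, while an even start stays even. The data never shows district A to be more dangerous; the algorithm just keeps confirming its own earlier choices. Real systems are far more complicated, but the mirror effect is the same.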

The Pro Side: When Ethical Design Shines

But don’t despair! For every stumble, there are countless dedicated ethical engineers pushing the boundaries of what’s possible, not just in terms of intelligence, but in terms of goodness. These are the stories that truly highlight the “meaning underneath” the tech:

- AI for Accessibility: Opening Doors for Everyone: This is where AI truly shines as an enabler. Companies like Google and Microsoft are using AI to make the digital world, and the physical world, more accessible. Think of Google’s Live Caption, which uses AI to provide real-time captions for any audio on your phone, making videos, podcasts, and even phone calls accessible for the deaf and hard of hearing. Or Microsoft’s Seeing AI app, which uses computer vision to narrate the world for people who are blind or have low vision – identifying objects, reading text, describing people, and even recognizing emotions (Microsoft, n.d.). This is AI intentionally designed to foster inclusion and enhance human capabilities, rather than just optimizing for profit.

- AI in Healthcare for Equity: Bridging Gaps in Care: While AI bias in healthcare is a serious concern, ethical engineers are also leveraging AI to reduce disparities. For instance, AI is being developed to assist in early detection of diseases like diabetic retinopathy, particularly in underserved regions where access to specialists is limited. By analyzing retinal scans with AI, non-specialist healthcare workers can screen for conditions that might otherwise go undiagnosed, leading to timely treatment and better outcomes for vulnerable populations (Gulshan et al., 2016). The ethical design here is about democratizing access to high-quality diagnostics.

- Privacy-Preserving AI in Your Pocket (and Beyond): Two techniques act as a kind of secret handshake for data: federated learning, which trains a shared model without gathering raw data in one place, and differential privacy, which adds carefully calibrated statistical noise so that no individual’s contribution can be singled out. These aren’t just academic concepts; they’re being deployed in real products. Google’s Gboard (their mobile keyboard) uses federated learning to improve its next-word prediction without sending your raw typing data to Google’s servers (McMahan et al., 2017). Your phone learns from your typing patterns locally and then sends only a model update, which the server combines with updates from many other devices. Similarly, Apple has incorporated differential privacy into its operating systems to collect usage data for improving features, adding noise so that overall trends can be learned without revealing what any individual user did (Apple, 2017). These are prime examples of technical solutions born from an ethical commitment to user privacy.

- Building Responsible AI Frameworks and Teams: Beyond individual projects, leading companies like IBM and Google have established dedicated AI ethics boards, principles, and responsible AI guidelines. These internal structures are designed to scrutinize AI projects from conception, identify potential ethical risks, and ensure that safeguards are built in. This shift towards institutionalizing ethical review is a massive step forward, proving that the commitment isn’t just theoretical; it’s operational (IBM, n.d.).

These examples showcase the profound positive impact that thoughtful, proactive ethical design can have. They highlight that AI isn’t just about what’s possible, but about what’s responsible and what truly benefits humanity.
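For readers curious what "learning locally and sharing only updates" looks like, here is a minimal sketch in the spirit of the federated averaging idea from McMahan et al. (2017). It is not Gboard's implementation: the one-parameter model `y = weight * x`, the tiny client datasets, and the function names are hypothetical, and real deployments add protections such as secure aggregation that are left out here.

```python
def local_update(weight, data, lr=0.1, epochs=5):
    """Run a few gradient-descent steps for y = weight * x on one device."""
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to the weight.
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight

def federated_round(global_weight, clients):
    """One round: each client trains locally; the server sees only weights."""
    updates = [(local_update(global_weight, data), len(data))
               for data in clients]
    total = sum(n for _, n in updates)
    # Average the client weights, weighted by how much data each one has.
    return sum(w * n for w, n in updates) / total

# Hypothetical on-device datasets, all following y = 3x.
# In this sketch the raw (x, y) pairs never reach federated_round's caller.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (1.0, 3.0)],
    [(2.0, 6.0)],
]

weight = 0.0
for _ in range(20):
    weight = federated_round(weight, clients)
print(round(weight, 4))
```

After a handful of rounds the shared weight settles very close to 3, the pattern hidden in every client's data, even though the server only ever handled weights and example counts. That is the core trade the bullet above describes: the model improves for everyone while the raw data stays on the device.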

The philosophical debate about whether AI can truly be “ethical” in the human sense continues, but the practical imperative for ethical design is undeniable. As Ginni Rometty, former CEO of IBM, wisely stated, “AI will not replace humans, but those who use AI will replace those who don’t” (Time Magazine, 2025). This extends to ethical AI too. Those who design and deploy AI ethically will be the ones who truly shape its positive impact on the world.

The journey of the ethical engineer is an ongoing adventure, full of complex problems and the satisfaction of building something truly meaningful. It’s about ensuring that as AI evolves, it remains a force for good, a testament to human ingenuity guided by human values. And that, my friends, is a story worth telling: one where the “characters” are algorithms and what’s at stake is the very fabric of our future.

References

- Apple. (2017, June 14). Differential Privacy in iOS 10 and macOS Sierra. Apple Machine Learning Journal. https://machinelearning.apple.com/research/differential-privacy-ios-10-macos-sierra

- Buolamwini, J., & Gebru, T. (2018). Gender Shades: Intersectional Phenotypic Disparities in Commercial Gender Classification. Proceedings of the 1st Conference on Fairness, Accountability, and Transparency, 77–91.

- Couchbase. (2025, May 23). A Comprehensive Guide to Federated Learning. The Couchbase Blog. https://www.couchbase.com/blog/federated-learning/

- Dastin, J. (2018, October 10). Amazon scraps secret AI recruiting tool that showed bias against women. Reuters. https://www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G

- Deliberate Directions. (n.d.). 75 Quotes About AI: Business, Ethics & the Future. Retrieved July 7, 2025, from https://deliberatedirections.com/quotes-about-artificial-intelligence/

- Eubanks, V. (2018). Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor. St. Martin’s Press.

- Gulshan, V., Peng, L., Coram, M., Stumpe, M. C., Wu, D., Narayanaswamy, A., … & Webster, D. R. (2016). Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA, 316(22), 2402-2410.

- IBM. (n.d.). Our approach to AI ethics. Retrieved July 7, 2025, from https://www.ibm.com/blogs/research/2021/04/ai-ethics-principles/

- McMahan, H. B., Moore, E., Ramage, D., Hampson, S., & Agüera y Arcas, B. (2017). Communication-Efficient Learning of Deep Networks from Decentralized Data. Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS), PMLR 54, 1273–1282.

- Microsoft. (n.d.). Seeing AI: Talking camera for the blind and low vision. Retrieved July 7, 2025, from https://www.microsoft.com/en-us/ai/seeing-ai

- Nemko. (2024, March 19). Ensuring AI Safety and Robustness: Essential Practices and Principles. https://www.nemko.com/blog/ai-safety-and-robustness

- O’Dea, M. (2024, February 20). AI bias in hiring: The ethics of algorithms that judge job applicants. World Economic Forum. https://www.weforum.org/agenda/2024/02/ai-bias-hiring-algorithms-ethics-jobs/

- O’Neil, C. (2016). Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. Crown.

- Primotly. (n.d.). Exploring AI Ethics: Key Examples of Ethical Dilemmas in AI Today. Retrieved July 7, 2025, from https://primotly.com/article/examples-of-successful-ethical-ai-projects

- Time Magazine. (2025, April 25). 15 Quotes on the Future of AI. https://time.com/partner-article/7279245/15-quotes-on-the-future-of-ai/

- Zuse School RelAI. (n.d.). What even is differential privacy?. Retrieved July 7, 2025, from https://zuseschoolrelai.de/blog/what-even-is-differential-privacy-2/

Additional Reading List

- Responsible AI in Practice: Building Trustworthy AI Systems by David Danks and Alex John London. This book delves into the practical aspects of implementing ethical AI, covering topics like accountability, transparency, and fairness in various domains.

- AI Ethics by Mark Coeckelbergh. This is a great philosophical exploration of the ethical challenges posed by AI, inviting readers to think critically about the implications of advanced artificial intelligence.

- The Age of AI: And Our Human Future by Henry A. Kissinger, Eric Schmidt, and Daniel Huttenlocher. A broad perspective on AI’s impact on geopolitics, society, and human identity, offering insights from different fields.

- Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence by Kate Crawford. This book offers a critical look at the material and political costs of AI, from the minerals extracted to the human labor involved, highlighting the need for a more holistic ethical framework.

- Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy by Cathy O’Neil. A highly influential book exploring how algorithms can exacerbate social inequalities and the need for greater accountability.

Additional Resources List

- Google AI’s Responsible AI Practices: Google shares its principles and tools for developing AI responsibly. A good resource for understanding industry approaches. [Search for “Google AI Responsible AI”]

- IBM’s AI Ethics Principles: IBM has been a leader in establishing ethical guidelines for AI development and deployment. [Search for “IBM AI Ethics Principles”]

- Montreal Declaration for a Responsible Development of Artificial Intelligence: A comprehensive declaration outlining ethical principles for AI, developed through a participatory process involving experts and citizens. [Search for “Montreal Declaration for Responsible AI”]

- AI Now Institute: A research center dedicated to understanding the social implications of AI. They publish insightful reports and analyses on topics like bias, accountability, and labor. [Search for “AI Now Institute”]

- The Alan Turing Institute – Ethics and Responsible Innovation: The UK’s national institute for data science and artificial intelligence offers resources and research on ethical AI. [Search for “Alan Turing Institute AI Ethics”]