Uncover the thrilling origin of AI! From WWII tech to today’s smart systems, explore how visionary minds shaped our connected world.

Ever had that moment when a movie character, seemingly out of nowhere, pulls out a piece of ancient tech that’s suddenly super relevant to their modern-day dilemma? Or maybe you’ve stumbled upon a dusty old book that, despite its age, perfectly explains some cutting-edge concept? That’s exactly how I feel about the Macy Conferences and the rise of Cybernetics.

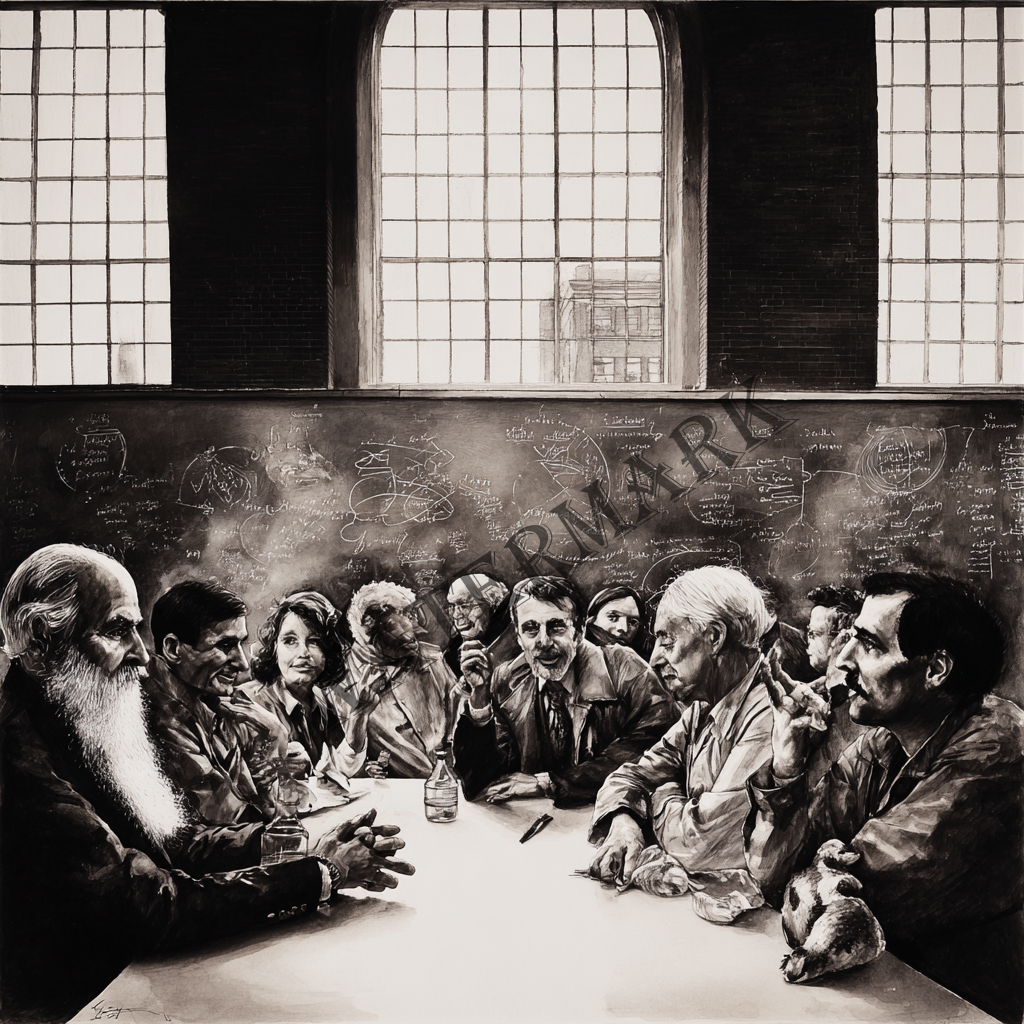

Imagine a smoke-filled room, not unlike a scene from a classic film noir, but instead of spies and femme fatales, you have brilliant scientists from every field imaginable – mathematicians, neurologists, anthropologists, engineers – all buzzing with post-World War II energy. They’re not talking about atomic bombs anymore. They’re talking about control. About communication. About how a thermostat, a human brain, and even a bustling city might all operate on similar, underlying principles. It sounds wild, right? A true intellectual jam session where the very notion of what intelligence is began to take shape.

This isn’t just a quaint historical anecdote; it’s the thrilling origin story of how we got to our current AI-saturated world. We’re talking about the fundamental ideas that laid the groundwork for everything from the neural networks powering ChatGPT to the self-driving cars navigating our streets. In this post, we’re going to dive into the surprisingly lighthearted yet profoundly impactful discussions that birthed cybernetics, explore the brilliant (and sometimes eccentric) minds who shaped it, and uncover why these decades-old debates still echo loudly in today’s headlines about AI ethics and the future of technology. Get ready for a fun ride back in time, because the “steersmen” of the past (cybernetics takes its name from the Greek word for “steersman”) have a lot to tell us about where we’re headed.

The Dawn of a New Science: From Wartime Necessity to Universal Principles

Imagine the intellectual ferment immediately following World War II. While the world breathed a collective sigh of relief, the scientific community was already grappling with the profound lessons learned from the conflict, particularly regarding complex systems and prediction. The war had pushed the boundaries of technology and our understanding of control beyond anything previously conceived.

What specifically led to this groundbreaking shift? The pressing demands of World War II inadvertently became a massive, grim laboratory for innovation. One crucial area was anti-aircraft gunnery. Gun crews needed to predict the future position of fast-moving enemy planes, a task far beyond manual calculation. This challenge demanded systems that could process information, make rapid decisions, and adjust their output based on continuous feedback from the target’s movements. This is precisely where Norbert Wiener, the eventual “father of cybernetics,” made his pivotal contributions. His work on designing automatic aiming systems for anti-aircraft guns forced him to think deeply about how machines could mimic biological processes of prediction and self-correction. The underlying principle was a “feedback loop”: the system would observe the target, measure the difference between the observed and desired trajectory, and then adjust its own actions to minimize that difference (Wiener, 1948). This was a revolutionary way of thinking about machines, moving beyond simple input-output to dynamic, adaptive behavior.
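To make the feedback loop concrete, here is a minimal Python sketch. It is an illustration only, not Wiener’s actual predictor: the function name `track`, the `gain` parameter, and the toy target paths are all assumptions chosen for this example. Each step measures the error between the target and the current aim, then corrects a fraction of it.

```python
def track(target_positions, gain=0.5, start=0.0):
    """Follow a target by repeatedly correcting a fraction of the error.

    `gain` and `start` are hypothetical tuning values for this sketch.
    Returns a list of (target, aim_after_correction, error_before_correction).
    """
    aim = start
    history = []
    for target in target_positions:
        error = target - aim      # observe: how far off is the current aim?
        aim += gain * error       # act: adjust the aim to shrink that error
        history.append((target, aim, error))
    return history


if __name__ == "__main__":
    # Toy target moving one unit per step: 10, 11, 12, ...
    path = [10.0 + t for t in range(20)]
    for target, aim, error in track(path):
        print(f"target={target:5.1f}  aim={aim:6.2f}  error={error:6.2f}")
```

Against a stationary target the error shrinks toward zero. Against a steadily moving one, this simple corrector settles into a constant lag, which hints at why prediction, and not just correction, mattered so much for gunnery.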

Beyond weaponry, the war also highlighted the immense power and complexity of communication networks and logistical systems. Keeping supply lines open, coordinating vast military operations, and even managing the flow of information across continents all revealed the need for new frameworks to understand control and organization in dynamic, uncertain environments. The development of early computing machines, born from cryptanalysis efforts such as Alan Turing’s work on breaking the Enigma cipher, further demonstrated the potential for mechanical processes to handle complex information, hinting at artificial intelligence long before the term existed.

This wartime context made it clear that a new, interdisciplinary approach was desperately needed. Traditional scientific silos simply weren’t equipped to tackle these interwoven problems of control, communication, and complex system behavior. It was against this backdrop that the visionary Josiah Macy Jr. Foundation stepped in, recognizing the imperative to bring brilliant minds from disparate fields together. From 1946 to 1953, it sponsored a series of ten interdisciplinary conferences, primarily held in New York, fostering dialogues between mathematicians, neurologists, anthropologists, psychologists, and engineers. The goal was ambitious: to forge a “universal language of information, feedback, and homeostasis” (Kline, 2020) that could apply across biological organisms, machines, and social structures. This movement, driven by wartime lessons and the desire for universal understanding, gave birth to Cybernetics.

The impact of this emerging field was immediate and profound because it offered a unifying theoretical framework. Previously, a thermostat, a nervous system, and a factory assembly line were seen as entirely separate domains. Cybernetics provided the conceptual glue, demonstrating that all these systems shared fundamental principles of self-regulation and information exchange. This wasn’t just about building better machines; it was about understanding the very essence of how intelligent, adaptive behavior emerges, whether in nature or in human-made artifacts. This universal applicability ensured its rapid adoption and influence across diverse fields, extending far beyond the initial wartime applications into peacetime science and engineering.

The Maverick Minds of Macy

The Macy Conferences weren’t just academic presentations; they were intense, often argumentative, dialogues. “Participants were deliberately chosen for their willingness to engage in interdisciplinary conversations, or for having formal training in multiple disciplines,” notes the Oxford Research Encyclopedia of Psychology (2020). This wasn’t about polite agreement; it was about smashing disciplinary silos and forging new ways of thinking.

Among the key players was Norbert Wiener himself, the aforementioned “father of cybernetics,” whose seminal 1948 book, Cybernetics: Or Control and Communication in the Animal and the Machine, solidified the field’s conceptual foundations. Then there were Warren McCulloch and Walter Pitts, whose groundbreaking 1943 paper, “A Logical Calculus of the Ideas Immanent in Nervous Activity,” laid the theoretical groundwork for artificial neural networks. They demonstrated how simple logical operations could be performed by interconnected “neurons,” a concept that underpins much of modern AI (Dutta, 2024). Influential anthropologists Margaret Mead and Gregory Bateson brought invaluable perspectives on communication and systems within social contexts, challenging purely technical views of control. Their presence underscored the conferences’ commitment to holistic understanding, even if, as some scholars like Kline (2020) suggest, the dream of a complete anthropological cybernetics eventually “fizzled out.” And let’s not forget John von Neumann, a true polymath whose contributions to computing architecture and game theory were deeply intertwined with cybernetic principles. His insights into self-reproducing automata were particularly prescient.
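The McCulloch-Pitts idea of logic performed by simple “neurons” can be sketched in a few lines of Python. This is a simplified, hypothetical rendering rather than the notation of the 1943 paper: the names `mp_neuron`, `logic_and`, `logic_or`, `logic_not`, and `logic_xor` are invented here, and inhibition is modeled with a negative weight for brevity (an assumption of this sketch).

```python
def mp_neuron(inputs, weights, threshold):
    """Threshold unit: fire (1) when the weighted sum of binary inputs
    reaches the threshold, otherwise stay silent (0)."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logic_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return mp_neuron([a, b], [1, 1], threshold=2)


def logic_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mp_neuron([a, b], [1, 1], threshold=1)


def logic_not(a):
    # A negative (inhibitory) weight keeps the unit silent when a is 1.
    return mp_neuron([a], [-1], threshold=0)


def logic_xor(a, b):
    # A small network of units: (a OR b) AND NOT (a AND b).
    return logic_and(logic_or(a, b), logic_not(logic_and(a, b)))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, logic_and(a, b), logic_or(a, b), logic_xor(a, b))
```

No single threshold unit of this kind can compute XOR on its own, so `logic_xor` wires several of them together, a small taste of why the “interconnected” part matters.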

These intellectual titans, often with vastly different vocabularies, grappled with fundamental questions: What is information? How do systems maintain stability (homeostasis)? How do they learn and adapt? These weren’t easy conversations, but they set the stage for everything that followed.

Philosophical Fireworks: Control vs. Understanding

Beyond the technical marvels, the Macy Conferences sparked profound philosophical debates that still resonate today. One central tension was the very purpose of cybernetics itself: was it about control or understanding?

Some saw cybernetics as a powerful tool for exerting control over complex systems, be they machines, organisms, or even societies. This perspective, while driving technological innovation, also raised concerns about potential manipulation or oversimplification of human experience. Others, like Bateson, championed cybernetics as a means to achieve deeper understanding of interconnectedness and patterns, emphasizing communication and feedback loops as pathways to wisdom, not just power. As a recent essay posted on ResearchGate puts it, “This essay talks about the cybernetic method and how important it is to the growth of AI, especially when it comes to making AI systems that are flexible, moral, and focused on people” (ResearchGate, 2024). This ongoing tension between control and understanding is a core philosophical debate in AI today.

Consider the ongoing discussion around AI ethics. When we debate algorithmic bias or the potential for autonomous weapons, we’re echoing concerns first articulated (perhaps implicitly) by these early cyberneticists. As Dr. Stuart Russell, a leading AI academic from UC Berkeley, warns, “The relevant time scale for superhuman AI is less predictable, but of course that means it, like nuclear fission, might arrive considerably sooner than expected… We are already devoting huge scientific and technical resources to creating ever-more-capable AI systems, with very little thought devoted to what happens if we succeed” (Russell, as cited in Goodreads). This sentiment, a call for careful consideration of control and societal impact, has deep roots in the cybernetic tradition.

From Steersmen to Self-Driving Cars: The Enduring Legacy

Fast forward to 2025, and the principles hammered out in those smoke-filled conference rooms are everywhere.

The McCulloch-Pitts neuron model, published in 1943, three years before the first Macy conference, and central to the thinking of that era, is the conceptual ancestor of the artificial neural networks that power today’s deep learning revolution. From predictive analytics to generative AI, the idea of systems learning from data through feedback is fundamentally cybernetic. X-Analytics recently launched “X-Analytics ARIA AI as the world’s first Cyber Risk Thinking Machine” (AZoRobotics, 2025), a clear nod to systems that learn and adapt.

Self-regulating systems, a cornerstone of cybernetics, are essential for everything from industrial robots to self-driving cars. These machines constantly monitor their environment, compare it to a desired state, and adjust their actions through feedback loops – a direct application of Wiener’s “steersman” concept. “By leveraging feedback loops, sensors, and real-time data analytics, cybernetic principles are applied to optimize energy consumption,” notes Data Guardian Hub (2024), citing examples from smart lighting to intelligent HVAC systems.
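The monitor, compare, adjust cycle described above is easy to sketch for the humblest self-regulating system of all, a thermostat. Everything in this Python example is hypothetical and chosen for illustration: the function names `thermostat_step` and `simulate`, the setpoint, the dead band, and the toy room physics (a fixed heating rate and a leak toward the outside temperature).

```python
def thermostat_step(temperature, heater_on, setpoint=20.0, band=0.5):
    """Decide the heater state from the current reading.

    The dead band around the setpoint (an assumed 0.5 degrees) stops the
    heater from flickering on and off at every tiny fluctuation.
    """
    if temperature < setpoint - band:
        return True           # too cold: switch the heater on
    if temperature > setpoint + band:
        return False          # too warm: switch the heater off
    return heater_on          # inside the band: keep the current state


def simulate(steps=60, start=15.0, outside=10.0, setpoint=20.0):
    """Run a toy room model under thermostat control."""
    temperature, heater_on = start, False
    readings = []
    for _ in range(steps):
        heater_on = thermostat_step(temperature, heater_on, setpoint)
        heating = 1.5 if heater_on else 0.0          # made-up heater output
        leakage = 0.1 * (temperature - outside)      # made-up heat loss
        temperature += heating - leakage
        readings.append((round(temperature, 2), heater_on))
    return readings


if __name__ == "__main__":
    for temperature, heater_on in simulate():
        print(f"{temperature:6.2f}  heater {'on' if heater_on else 'off'}")
```

In this toy model the room warms up from its cold start and then hovers around the setpoint, with the heater cycling on and off. That hovering is homeostasis in miniature.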

Beyond just technology, cybernetics profoundly influenced the broader field of systems thinking. It encouraged us to see the world not as isolated parts, but as interconnected systems where actions have ripple effects. This holistic view is crucial for tackling complex global challenges, from climate change to public health.

“There’s no question we are in an AI and data revolution, which means that we’re in a customer revolution and a business revolution,” states Clara Shih of Salesforce AI, emphasizing the interconnectedness of data, AI, and business transformation (Shih, as cited in Deliberate Directions, 2024). This perfectly encapsulates the systems-level thinking that originated with cybernetics. Ginni Rometty, former IBM CEO, articulated a truly cybernetic vision when she said, “Some people call this artificial intelligence, but the reality is this technology will enhance us. So instead of artificial intelligence, I think we’ll augment our intelligence” (Rometty, as cited in Deliberate Directions, 2024). This speaks to the feedback loop between human and machine, where each continuously influences and improves the other.

Even in medical education, the Macy Foundation is still at it! A recent report highlights the “transformative opportunities in medical education” offered by AI, while also emphasizing the need to address “bias in AI algorithms, concerns about transparency, inadequate ethical guidelines, and risks of over-reliance” (Boscardin et al., 2025). This ongoing work, focused on ensuring AI is “human-factor” conscious, directly echoes the interdisciplinary and ethical concerns that permeated the original Macy Cybernetics Conferences.

The Steersman’s Enduring Lesson

The Macy Conferences and the rise of cybernetics remind us that technology isn’t born in a vacuum. It emerges from deep intellectual inquiry, passionate debate, and the brave crossing of disciplinary boundaries. The seemingly abstract ideas of feedback, control, and communication, forged in post-war intellectual salons, are the invisible threads woven into the fabric of our modern AI-powered world.

So, next time you marvel at a generative AI producing text, or a robot navigating a cluttered room, remember the “steersmen” of the Macy Conferences. They weren’t just building machines; they were laying the philosophical and scientific foundations for a future where humans and intelligent systems constantly interact, learn, and (hopefully) grow together. It’s a fun ride, indeed, with layers of meaning and history underneath every algorithm.

References

- Boscardin, C. K., Abdulnour, R.-E. E., & Gin, B. C. (2025). Macy Foundation Innovation Report Part I: Current Landscape of Artificial Intelligence in Medical Education. Academic Medicine. Advance online publication. https://pubmed.ncbi.nlm.nih.gov/40456178/

- Data Guardian Hub. (2024, May 22). The role of cybernetics in sustainable technology development. Retrieved July 14, 2025, from https://dataguardianhub.com/the-role-of-cybernetics-in-sustainable-technology-development/

- Deliberate Directions. (2024, October 30). 75 quotes about AI: Business, ethics & the future. Retrieved July 14, 2025, from https://deliberatedirections.com/quotes-about-artificial-intelligence/

- Dutta, S. (2024, June 2). The birth of artificial neurons: McCulloch and Pitts’ revolutionary model. Medium. https://medium.com/@sanjay_dutta/the-birth-of-artificial-neurons-mcculloch-and-pitts-revolutionary-model-bbebed538364

- Goodreads. (n.d.). Quotes about artificial intelligence. Retrieved July 14, 2025, from https://www.goodreads.com/quotes/search?page=50&q=artificial+intelligence

- Kline, R. (2020, January 19). Communication without control: Anthropology and alternative models of information at the Josiah Macy, Jr. Conferences in Cybernetics. HistAnthro. https://histanthro.org/notes/communication-without-control-macy-conferences/

- Oxford Research Encyclopedia of Psychology. (2020, April 30). Macy Conferences on Cybernetics: Reinstantiating the Mind. Retrieved July 14, 2025, from https://oxfordre.com/psychology/display/10.1093/acrefore/9780190236557.001.0001/acrefore-9780190236557-e-541

- ResearchGate. (2024, May 16). The cybernetics perspective of AI. Retrieved July 14, 2025, from https://www.researchgate.net/publication/380070859_The_Cybernetics_Perspective_of_AI

- Wiener, N. (1948). Cybernetics: Or control and communication in the animal and the machine. MIT Press.

- X-Analytics. (2025, June 19). X-Analytics launches world’s first cyber risk thinking machine. AZoRobotics. https://www.azorobotics.com/news-article.aspx?newsID=11342

Additional Reading

- Ashby, W. R. (1956). An introduction to cybernetics. Chapman & Hall.

- Heims, S. J. (1993). The cybernetics group. MIT Press.

- Mead, M. (1968). Cybernetics of cybernetics. In H. Von Foerster, J. D. White, L. J. Peterson, & J. K. Ranney (Eds.), Purposive systems: Proceedings of the first annual symposium of the American Society for Cybernetics (pp. 1–11). Spartan Books.

- Mindell, D. A. (2002). Between human and machine: Feedback, control, and computing before cybernetics. Johns Hopkins University Press.

- Pickering, A. (2010). The cybernetic brain: Sketches of another future. University of Chicago Press.

Additional Resources

- The American Society for Cybernetics (ASC): A great resource for understanding contemporary cybernetics and its applications. [Search for “American Society for Cybernetics”]

- The Norbert Wiener Center for Harmonic Analysis and Applications (University of Maryland): Dedicated to the legacy of Norbert Wiener and ongoing research inspired by his work. [Search for “Norbert Wiener Center”]

- The Cybernetics Society: A UK-based organization promoting the understanding and application of cybernetics. [Search for “The Cybernetics Society”]