It starts with a voicemail. You hit play—and hear yourself speaking words you never said. The tone, the cadence, the pauses—it’s unmistakably your voice, but it’s been twisted into something strange. Maybe it’s endorsing a political candidate you don’t support, narrating a sketchy product ad, or worse—reaching out to a friend or loved one pretending to be you.

This isn’t an episode of Black Mirror or a far-future dystopia. It’s happening now.

In early 2023, a mother in Arizona received a terrifying phone call. On the other end was her teenage daughter, sobbing and pleading for help. Only it wasn’t her daughter: it was an artificial intelligence (AI)-generated clone of her voice, possibly built from social media videos, used in an attempted scam. “It was completely her voice,” the mother later told NBC News. “I never doubted for a second it was her.” (NBC News, 2024)

This story—and many like it—marks our uneasy arrival into the AI doppelgänger era.

In this blog post, we’ll explore how artificial intelligence, especially technologies like voice cloning (software that can replicate a person’s voice) and deepfakes (AI-generated videos that can realistically mimic someone’s face and expressions), is making it possible to create eerily realistic digital copies of people. These aren’t just futuristic novelties. They’re raising questions that strike at the heart of our identity, our privacy, and even what it means to be human.

What happens when your digital twin starts acting on its own?

Who owns your voice—or your face—when anyone with the right tools can recreate it?

And perhaps most hauntingly: if a version of “you” can be endlessly replicated, what makes you… well, you?

Let’s dive in.

Early Days: The Seeds of Digital Cloning

The concept of replicating human features digitally isn’t entirely new. In the 1990s, researchers began experimenting with computer-generated imagery (CGI) to create realistic human images. These early endeavors laid the groundwork for more sophisticated techniques, even though the technology was in its infancy and the results were rudimentary.

Voice Cloning: From Robotic Utterances to Near-Perfect Mimicry

Voice synthesis has a rich history. Early text-to-speech systems produced robotic, monotonous output, far from natural human speech. However, with advances in machine learning (a type of AI that enables computers to learn from data) and neural networks (pattern-recognizing algorithms loosely inspired by the interconnected neurons of the human brain), voice cloning underwent a significant transformation.

By the mid-2010s, technologies like WaveNet, developed by DeepMind, utilized deep neural networks to generate speech that was remarkably human-like. This innovation opened doors for applications ranging from virtual assistants to aiding those who had lost their voices. Yet, it also raised concerns about potential misuse, such as creating unauthorized voice recordings.

Deepfakes: The Visual Counterpart

Parallel to voice cloning, the realm of visual media witnessed the rise of deepfakes. The term “deepfake” combines “deep learning” (a subset of machine learning focused on neural networks with many layers) and “fake.” It refers to AI-generated videos or images where one person’s likeness is seamlessly swapped with another’s, making it appear as though someone said or did something they didn’t.

The inception of deepfakes can be traced back to academic research in the 1990s, where efforts were made to animate human faces in videos. However, the phenomenon gained significant attention around 2017 when online communities began sharing tools that allowed users to create realistic face-swapped videos. Initially, these were often used to insert celebrities into scenes they had never participated in, raising immediate ethical and legal red flags.

Democratization and Accessibility

What was once the domain of skilled researchers with access to specialized equipment has now become accessible to the general public. Open-source software and user-friendly applications have democratized the creation of digital clones. Today, with just a computer and some training data (like photos or voice recordings), individuals can produce convincing digital replicas. This widespread accessibility amplifies both the potential applications and the risks associated with digital cloning.

The Double-Edged Sword of Innovation

The evolution of digital cloning technologies showcases human ingenuity and the rapid progression of AI capabilities. On one hand, they offer promising applications in entertainment, education, and assistive technologies. On the other, they present challenges related to consent, authenticity, and security. As we navigate this landscape, it’s crucial to balance innovation with ethical considerations, ensuring that these powerful tools are used responsibly.

Ethical and Legal Implications of AI Doppelgängers: In-Depth Analysis and Case Studies

The emergence of AI-generated digital clones, or doppelgängers, is reshaping sectors such as entertainment, customer service, and personal assistance. However, this technological advancement also brings significant ethical and legal challenges that warrant thorough examination.

Unauthorized Use and Consent Violations

One of the primary ethical concerns is the unauthorized use of an individual’s likeness, voice, or persona. Consent is foundational to personal autonomy, and its absence in the creation of AI doppelgängers can lead to profound ethical breaches.

Case Study: Voice Actors vs. LOVO

In May 2024, two voice actors filed a class-action lawsuit against Berkeley-based AI company LOVO. The actors alleged that LOVO used AI voiceover technology to replicate their voices without permission, violating their right to publicity and constituting false advertising. This case underscores the necessity for explicit consent in utilizing an individual’s voice for AI applications.

Privacy Infringements and Data Security

The creation of AI doppelgängers often involves the collection and processing of personal data, raising significant privacy concerns. Individuals have the right to control how their personal information is used, and unauthorized data usage can lead to legal ramifications.

Case Study: Violation of Privacy Rights

Cloning someone’s voice without their consent can violate their privacy. Individuals generally have a right to control how their likeness, including their voice, is used, and using a cloned voice for commercial purposes, pranks, or to harm someone’s reputation can be grounds for a legal claim.

Fraud and Misinformation

AI doppelgängers can be exploited to perpetrate fraud and disseminate misinformation. The ability to create realistic digital replicas enables malicious actors to deceive individuals and organizations, leading to financial and reputational damage.

Case Study: CEO Fraud via Deepfake Audio

In 2019, a U.K.-based energy company fell victim to a sophisticated scam in which fraudsters used AI-generated deepfake audio to impersonate the chief executive of its German parent company. Believing he was speaking with his boss, the U.K. firm’s CEO transferred €220,000 (about $243,000) to a fraudulent account, highlighting the potential of AI to facilitate complex fraud schemes.

Legislative Responses and Regulatory Challenges

The rapid advancement of AI technologies has outpaced existing legal frameworks, necessitating new legislation to address emerging challenges.

Case Study: Tennessee’s ELVIS Act

In March 2024, Tennessee enacted the Ensuring Likeness Voice and Image Security (ELVIS) Act, aimed at protecting artists from the unauthorized use of their voices through AI technologies. This legislation represents a proactive approach to safeguarding personal rights in the digital age.

Intellectual Property and Ownership

Determining the ownership of AI-generated content poses complex legal questions. When an AI doppelgänger is created, issues arise regarding who holds the rights to the digital likeness and how it can be used.

Case Study: AI Voice Cloning and Copyright Laws

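The use of AI to replicate voices raises concerns about consent and ownership. A notable instance was the 2021 documentary “Roadrunner,” which used AI to reproduce the late celebrity chef Anthony Bourdain’s voice reading words he had written but never recorded. The director said he had checked with people close to Bourdain, but Bourdain’s estranged wife publicly disputed that she had approved it, sparking debate about the ethical use of AI in media production.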

Psychological and Societal Impacts

Beyond legal and financial implications, AI doppelgängers can have profound psychological effects on individuals and societal trust.

Case Study: Digital Doppelgängers and Identity

The creation of pre-mortem AI clones, or digital doppelgängers, raises concerns about identity fragmentation and the erosion of personal authenticity. Individuals may experience psychological distress upon encountering AI replicas of themselves, leading to broader societal implications.

Wrap Up

The advent of AI doppelgängers presents a double-edged sword, offering innovative possibilities while posing significant ethical and legal challenges. Addressing these issues requires a collaborative effort among technologists, legal experts, ethicists, and policymakers to develop frameworks that protect individual rights and maintain societal trust in the age of AI.

Philosophical Considerations: When a Clone Becomes a “You”

Let’s take a step back from the headlines and lawsuits for a moment.

The rise of AI-generated doppelgängers doesn’t just affect business, security, or law—it nudges us into deep philosophical waters. Questions we once left to sci-fi movies and late-night debates are now knocking at our door, very much real, and very much relevant.

If technology can imitate your face, your voice, even your personality—

What does that mean for your identity?

Where do we draw the line between imitation and essence?

Let’s explore both sides of this emerging debate, and you can decide where you stand.

Argument One: AI Clones Are Just Tools (Helpful, Impressive, but Harmless)

This perspective treats AI clones as digital puppets—impressive, yes, but ultimately under human control. Like any creative or productivity tool, they’re only as good—or as dangerous—as the people who use them.

“We’re not replicating people. We’re representing them,” says Dr. Maya Leung, a professor of Digital Media and Ethics. “The difference is everything.”

Here’s why this argument resonates for many:

- AI clones are not sentient (which means they don’t have thoughts or feelings). They mimic behavior, not consciousness.

- If someone consents to their likeness being used, where’s the harm?

- These tools can be empowering—like preserving the voice of someone who lost speech due to illness, or enabling a favorite actor to “perform” even in retirement.

Think of James Earl Jones, who gave Disney permission to use AI to clone his voice for Darth Vader: an iconic sound preserved, with dignity and control.

In this view, the focus isn’t on identity being stolen, but extended—augmented, even. So long as ethical safeguards are in place, it’s just another form of digital storytelling.

Argument Two: Clones Blur Boundaries We Still Don’t Understand

But not everyone’s convinced this is harmless innovation.

This side of the debate argues that AI doppelgängers—particularly when used without consent or in emotionally sensitive contexts—raise serious concerns about the erosion of authenticity.

“You don’t need a soul to influence people,” notes Prof. Alain Richards, who researches media trust at Cambridge. “You only need a convincing performance.”

Here’s the crux of this viewpoint:

- When an AI clone walks, talks, and behaves like you, it may be treated as you by others. That perception alone can have real consequences.

- Even with disclaimers, deepfake content or voice simulations can spread misinformation or manipulate opinions before the truth ever catches up.

- And if your likeness can be used posthumously—without your consent—what happens to the concept of legacy or personal agency?

Case in point: In the documentary “Roadrunner,” AI was used to re-create Anthony Bourdain’s voice reading private emails. Some viewers found it poignant; others felt it crossed a line, raising questions about posthumous consent.

⚓ Anchoring the Debate: The Ship of Theseus in a Digital Sea

Let’s dip briefly into classic philosophy.

The Ship of Theseus thought experiment asks: if you replace a ship’s parts one by one, until none of the original pieces remain, is it still the same ship?

Now update the metaphor: If we clone your voice, your writing style, your gestures—piece by piece—at what point does it stop being “you”?

This is no longer just theoretical. AI now allows people to train digital “ghosts” of themselves—clones that can answer questions, hold conversations, even offer life advice based on their past behavior.

Is this a meaningful digital legacy—or a version of self that can be hijacked, exploited, or misunderstood?

The Comfort and the Uncanniness of a Digital You

Some see AI clones as a form of digital continuity. Imagine leaving behind a version of yourself for your grandchildren to chat with. Is that eerie—or meaningful?

Others worry that this opens the door to emotional confusion. What happens when people grieve a clone? Or form connections with someone who isn’t real?

And in a world already swimming in misinformation, does a sea of realistic but artificial “selves” make it harder to trust anything?

These are serious questions. But they’re also open ones.

✨ Where Do You Stand?

Ultimately, AI doppelgängers exist in that uniquely modern space: part technological marvel, part ethical puzzle, part existential head-scratcher.

So here’s the invitation:

You don’t need to be a philosopher to weigh in. You just need to be human.

- If someone cloned you perfectly—voice, tone, style—would that feel like flattery or violation?

- Should your “digital double” be allowed to live on after you’re gone?

- Would you trust a message from a loved one’s AI, if it sounded and felt like them?

Drop your thoughts in the comments. Let’s explore this digital frontier together.

Voices from the Field: Industry Leaders and Academics Weigh In

The emergence of AI doppelgängers has sparked a spectrum of opinions among experts, reflecting the complexity and nuance of this technological evolution. Let’s delve into the perspectives of leading professionals and scholars who are navigating the promises and perils of AI-generated digital replicas.

Advocates: Harnessing AI for Innovation and Empowerment

Artavazd Yeritsyan, CEO and founder of Podcastle, envisions AI voice cloning as a transformative tool for content creation. He emphasizes that AI can democratize media production, making it more accessible and efficient. “We are changing the way audio and video content is created, making it a lot easier for creators and teams by natively integrating AI technologies,” Yeritsyan explains. His platform incorporates ethical safeguards to prevent misuse, underscoring the potential of AI to enhance creativity when implemented responsibly.

In the fashion industry, companies like Maison Meta advocate for the use of AI-generated digital models, suggesting that “digital twins” could grant human models greater control over their likenesses and open new revenue streams. They argue that, with proper consent and regulation, AI can offer innovative avenues for models to engage with brands and audiences.

Cautionary Voices: Highlighting Ethical and Security Concerns

Conversely, experts like Dr. Dominic Lees, an AI and film specialist, express reservations about the emotional authenticity of AI-generated voices. He points out that while AI can replicate speech patterns, it lacks the nuanced understanding of human emotion, which is crucial for genuine communication. “The big problem is that AI doesn’t understand emotion and how that changes how a word or a phrase might have emotional impact,” Lees notes, highlighting the limitations of AI in capturing the depth of human expression.

Security professionals also raise alarms about the potential for AI voice cloning to facilitate sophisticated scams. Erolin, a cybersecurity expert, warns, “AI voice cloning is not a futuristic risk. It’s a risk that’s here today.” He advocates for robust authentication measures to mitigate the threats posed by realistic voice impersonations, emphasizing the immediate need for enhanced security protocols.

The Call for Regulation and Ethical Frameworks

The rapid advancement of AI technologies has outpaced existing legal frameworks, prompting calls for comprehensive regulation. A report by Consumer Reports examines popular voice cloning products and finds that several fail to implement basic measures to prevent unauthorized use. The report underscores the necessity for industry-wide standards and ethical guidelines to safeguard against potential abuses.

Sam Altman, CEO and co-founder of OpenAI, acknowledges the double-edged nature of AI advancements. While recognizing the potential for bias and discrimination, he also believes in AI’s capacity to positively impact society and create new economic opportunities. Altman emphasizes the importance of ensuring that artificial general intelligence benefits all of humanity, advocating for a balanced approach to AI development.

Academic Insights: Exploring Societal Implications

Academics are actively exploring the broader societal impacts of AI doppelgängers. A study published on Qeios examines the ethical and societal implications of pre-mortem AI clones, highlighting concerns about identity fragmentation and the erosion of personal authenticity. The paper calls for responsible AI governance that balances technological innovation with ethical considerations.

Similarly, research from Carnegie Mellon University indicates a public desire for governmental regulation of deepfake technologies. Participants expressed concerns about the potential misuse of deepfakes and advocated for legal measures to prevent their harmful applications, reflecting a societal push for oversight in the realm of AI-generated content.

Bridging Perspectives: Towards a Balanced Future

The discourse surrounding AI doppelgängers is marked by a tension between innovation and caution. While some industry leaders champion the creative and economic potentials of AI, others highlight the urgent need for ethical guidelines and robust security measures. This dynamic conversation underscores the importance of collaborative efforts among technologists, policymakers, ethicists, and the public to navigate the complexities of AI-generated digital identities.

As we stand at this technological crossroads, the collective insights of these professionals and academics serve as a compass, guiding us toward a future where AI’s capabilities are harnessed responsibly, ethically, and for the benefit of all.

Navigating the Future: Where AI Doppelgängers Are Going Next

The trajectory of AI doppelgängers is no longer a straight line of innovation—it’s more like a branching river, flowing toward multiple futures at once. Some of these futures feel inspiring, others… a bit disquieting. But all of them are plausible.

So where are we headed?

The Next Frontier: Hyperpersonalization and Digital Legacy

One of the clearest trends in AI cloning is toward hyperpersonalization. Imagine digital assistants that sound like your best friend, teachers that speak in your parent’s familiar tone, or marketing videos where the spokesperson is… you. These applications are already emerging. Companies are offering “personal AI” models trained on your own data, meant to answer emails, schedule appointments, or even carry on conversations when you’re unavailable.

Then there’s digital legacy—the concept of creating an AI version of yourself to live on after death. Whether for memoir-style storytelling, intergenerational bonding, or even grief support, more people are exploring AI as a way to extend a person’s presence beyond the limits of life.

But the question remains: At what cost?

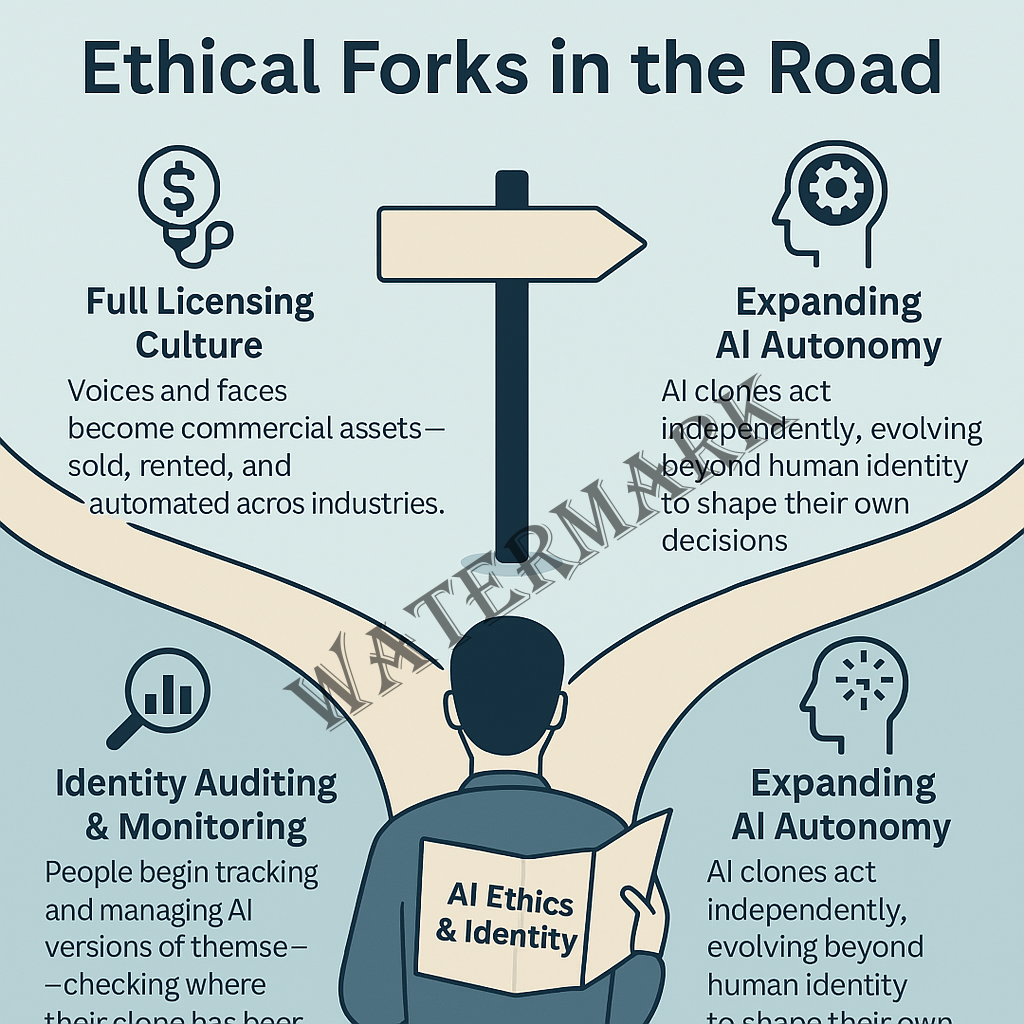

Ethical Forks in the Road

As this technology grows more sophisticated, we’ll face tough decisions:

- Should people be able to license their voices or faces to hundreds of brands at once—essentially becoming digital influencers who never sleep?

- Will “identity management” evolve to include AI clone audits, where you monitor the behavior of your replicas in digital spaces?

- And what happens when AI models learn enough about your behavior to act like you—even better than you think you would?

These aren’t just technological milestones. They are existential checkpoints.

Guardrails or Freefall?

As with the early internet, AI cloning technology is racing ahead of regulation. Lawmakers worldwide are trying to keep up: some proactively, like California’s digital likeness legislation and Tennessee’s ELVIS Act, others reactively, after the damage has already been done.

The big unknown is whether we’ll build guardrails in time—ethical, legal, and cultural norms that can steer this innovation toward the public good rather than manipulation or exploitation.

But this is also where culture steps in. Our shared expectations of what is respectful, what is “real,” and what deserves protection will shape how this technology is used. Because in the end, how we use AI is always a reflection of who we are.

Join the Conversation: Your Voice Matters (Literally)

As AI blurs the line between real and replicated, we’re all stakeholders in what comes next. Whether you’re a technologist, artist, policymaker, or just a curious observer—your perspective helps shape how society responds to these rapidly evolving tools.

- Should your voice be your own forever—or licensed like a song?

- Would you want a digital version of yourself to speak for you… after you’re gone?

- Where should we draw the line between innovation and intrusion?

Drop your thoughts in the comments below.

Share this post with friends, colleagues, or your favorite futurist.

This conversation doesn’t end here—and frankly, it shouldn’t. Let’s build a future we can trust, together.

Final Thoughts: Whose Voice Is It, Really?

We live in a time where your image, your voice, even your personality can be re-created—sometimes with your blessing, sometimes without. In this digital hall of mirrors, we may be forced to confront one of the most human questions of all:

What part of “you” can never be copied?

Is it your memories? Your intentions? Your ability to change your mind, to feel remorse, to forgive, to dream?

AI may someday recreate our tone, cadence, and quirks with perfect precision. But so far, it still stumbles at the essence of what makes us human: our context, our contradictions, and our capacity to mean something more than just the sum of our words.

Whether you’re excited by the creative possibilities of cloning or unsettled by its philosophical echoes, one thing is certain: AI doppelgängers have arrived. And we’ll need to decide—collectively and individually—how we want to live alongside them.

In a world full of voices, the most important one might still be your own.

References (APA Style)

- Findlay, G. (2025, January 7). ‘You’re gonna find this creepy’: My AI-cloned voice was used by the far right. Could I stop it? The Guardian. https://www.theguardian.com/commentisfree/2025/jan/07/ai-clone-voice-far-right-fake-audio

- Lees, D. (2024, November 19). AI cloning of celebrity voices outpacing the law, experts warn. The Guardian. https://www.theguardian.com/technology/2024/nov/19/ai-cloning-of-celebrity-voices-outpacing-the-law-experts-warn

- The Times. (2024, July 15). Deepfake fraudsters impersonate FTSE chief executives. The Times. https://www.thetimes.co.uk/article/deepfake-fraudsters-impersonate-ftse-chief-executives-z9vvnz93l

- The Verge. (2024, September 17). California governor signs rules limiting AI actor clones. The Verge. https://www.theverge.com/2024/9/17/24247583/california-governor-newsom-signs-ai-digital-replica-bills

- Holland & Knight LLP. (2024, April 4). First-of-its-kind AI law addresses deepfakes and voice clones. https://www.hklaw.com/en/insights/publications/2024/04/first-of-its-kind-ai-law-addresses-deep-fakes-and-voice-clones

- Consumer Reports. (2024, March). Do AI voice cloning companies do enough to prevent misuse? https://innovation.consumerreports.org/new-report-do-these-6-ai-voice-cloning-companies-do-enough-to-prevent-misuse

- LGT Law. (2024, May). Class action filed over AI-generated voice cloning. https://www.lgt-law.com/blog/2024/05/class-action-filed-over-ai-generated-voice-cloning

- Trend Micro. (2019). CEO fraud via deepfake audio steals $243,000 from UK firm. https://www.trendmicro.com/vinfo/us/security/news/cyber-attacks/unusual-ceo-fraud-via-deepfake-audio-steals-us-243-000-from-u-k-company

Additional Resources

- HereAfter AI – A company offering personal legacy voice AI: https://www.hereafter.ai

- AI Voice Cloning and the Law – National Security Law Firm: https://www.nationalsecuritylawfirm.com/understanding-voice-cloning-the-laws-and-your-rights

- Consumer Reports Innovation Lab – Responsible AI reviews: https://innovation.consumerreports.org/

- MIT Technology Review – News and insights on emerging AI trends: https://www.technologyreview.com

- CMU Ideas Lab (2024) – Research on deepfakes and public perception: https://www.cmu.edu/ideas-social-cybersecurity

Recommended Readings

- Methuku, V., & Myakala, P. K. (2025). Digital doppelgängers: Ethical and societal implications of pre-mortem AI clones. arXiv. https://arxiv.org/abs/2502.21248

- Terziyan, V., & Gavriushenko, M. (2024). Cloning and training collective intelligence with generative adversarial networks. IET Research. https://ietresearch.onlinelibrary.wiley.com/doi/full/10.1049/cim2.12008

- Qeios (2024). AI Clones and the Fragmentation of Identity. https://www.qeios.com/read/9X78NO

- Richards, A. (2023). Synthetic Identity in the Age of AI. Cambridge Digital Ethics Papers.

- Metz, C. (2023). AI: The Problem Isn’t the Tool, It’s the Mirror. The New York Times Opinion. https://www.nytimes.com