What is Prompt Engineering?

Before we dive into prompt engineering as a technical skill, let’s look at what it is and how it came to be.

Prompt engineering refers to the process of designing and refining inputs (called prompts) given to large language models (LLMs) to achieve the most accurate, useful, and contextually relevant outputs. It’s a critical interface between human intent and machine-generated responses, and it exists because even the most advanced AI models are, at their core, pattern-matching engines that respond based on the prompts they receive.

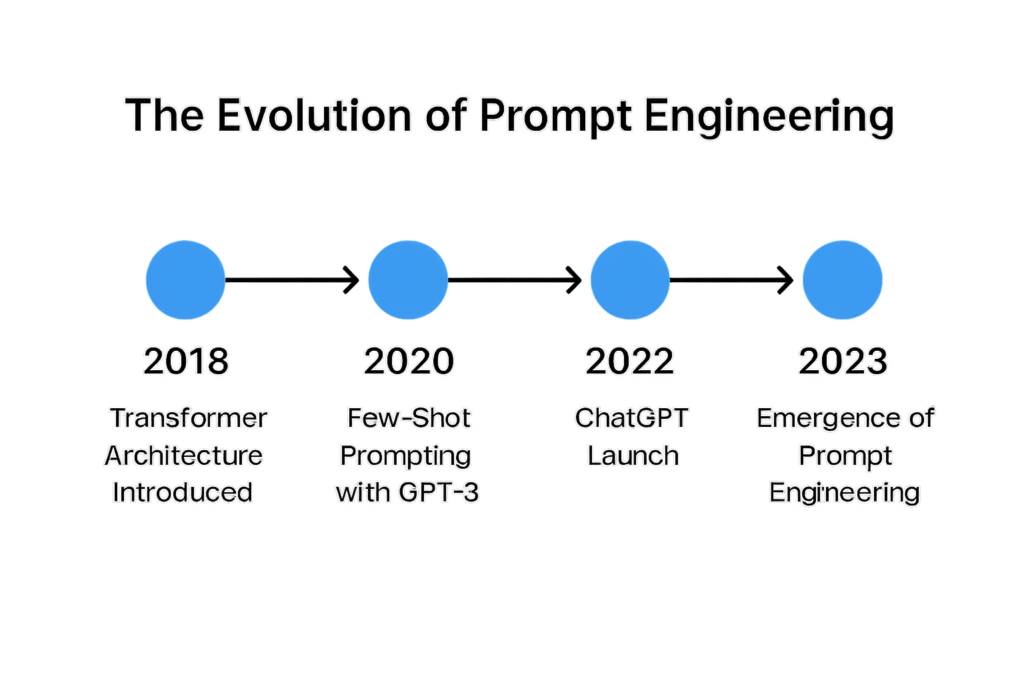

The term started gaining traction around 2020, in tandem with the rise of models like GPT-3. As developers and early adopters began experimenting with these models, they realized that the way you ask the AI something can drastically affect what you get back. What initially started as a niche concern for researchers and hobbyists quickly evolved into a core component of AI literacy for businesses, marketers, educators, and even legal professionals.

In the early days, people would enter simple commands into AI interfaces, such as “Summarize this article” or “Write a poem.” However, results were often inconsistent. The realization that structured and thoughtful prompts could “shape” the AI’s behavior led to the emergence of prompt engineering as a formal practice. Today, it’s a vital skill that blends aspects of psychology, linguistics, logic, and software development.

“Prompt engineering is quickly becoming the most essential skill in the age of AI. It’s like programming, but in natural language.” — Andrej Karpathy, Founding Member of OpenAI

As generative AI tools such as ChatGPT, Claude, and Gemini become more ingrained in business operations, education, and content creation, understanding how to communicate effectively with these models is paramount.

At its core, prompt engineering is about crafting precise and strategic inputs (prompts) that guide AI models to generate desired outputs. Unlike traditional programming, where instructions are written in code, prompt engineering gives instructions in clear natural language, framed so that the model interprets them the way you intend.

Why Prompt Engineering Matters

Prompt engineering isn’t just a buzzword: it’s a transformative layer of interaction between humans and machines that determines the usability, effectiveness, and safety of AI systems. While large language models (LLMs) like ChatGPT, Claude, and Gemini are immensely powerful, their responses are highly sensitive to the phrasing, structure, and clarity of the prompts they receive. This means that the same model can give dramatically different outputs depending on how a question is framed.

What Prompt Engineering Impacts:

- Content Quality: Refined prompts yield deeper, more nuanced, and actionable content.

- Business Efficiency: Reduces time spent editing or rerunning tasks, especially in marketing, support, and documentation.

- Data Interpretation: Better prompts lead to clearer, more reliable summaries and insights from complex datasets.

- Customer Experience: In chatbots and support systems, prompt clarity ensures users get faster, more accurate help.

- Bias and Safety: Thoughtful prompts can reduce the chances of biased or harmful outputs.

Success Stories:

- Legal Tech: A law firm used prompt engineering to generate tailored contract summaries from complex legal texts. By assigning the AI a “role” (legal assistant) and guiding it to highlight key clauses, they cut review time by 60%.

- Education: An ed-tech startup trained instructors on prompt engineering, helping them craft AI-generated lesson plans that followed curriculum standards. This increased content approval rates by over 40%.

- Marketing: A digital marketing agency used layered prompts to generate SEO blog posts with embedded keyword strategies. Compared to baseline AI generation, engagement went up by 25%.

When Prompting Goes Wrong:

- Generic Outputs: A finance team used AI to draft investor reports without setting any constraints. The outputs lacked specifics, included outdated examples, and failed to impress stakeholders.

- Bias Amplification: Poorly constructed prompts in a healthcare app led to biased responses in medical risk assessments until the prompts were restructured to include neutral language and context framing.

Use Case Example (Expanded):

Let’s break down what happens in a practical setting.

Scenario: A marketing manager wants to generate a blog post to promote a SaaS product aimed at startup founders.

Prompt 1 (Vague): “Write a blog about marketing.”

- Result: The AI generates a generic post about marketing basics—no specific audience, unclear tone, and lacking strategic depth.

Prompt 2 (Refined with Prompt Engineering): “You are a SaaS marketing expert. Write a 700-word blog post on SaaS marketing strategies tailored for early-stage startup founders. Use SEO-optimized language, include relevant keywords, structure it with H2 headers and bullet points, and end with a persuasive call-to-action. Maintain a professional yet friendly tone.”

- Result: The AI delivers a structured, SEO-ready blog post with clear value, actionable tips, and the appropriate tone and complexity for the target audience. The result is nearly publish-ready with minimal editing.

By breaking down the task and guiding the model through each requirement, the user transforms a vague goal into a precision-crafted outcome. This saves time, ensures consistency, and unlocks the full value of AI assistance.
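One way to make this breakdown repeatable is to treat the refined prompt as separate components (role, task, audience, constraints, tone) and assemble them in a fixed order. The sketch below is a minimal illustration, assuming a hypothetical `PromptSpec` class whose field names are chosen for this example; it only builds the prompt text and does not call any model.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of an engineered prompt."""
    role: str
    task: str
    audience: str
    tone: str
    constraints: list = field(default_factory=list)

    def render(self) -> str:
        # Each component becomes its own line, in a fixed order, so every
        # requirement from the original goal is stated explicitly.
        lines = [
            f"You are {self.role}.",
            f"{self.task} for {self.audience}.",
        ]
        lines += [f"- {c}" for c in self.constraints]
        lines.append(f"Maintain a {self.tone} tone.")
        return "\n".join(lines)


spec = PromptSpec(
    role="a SaaS marketing expert",
    task="Write a 700-word blog post on SaaS marketing strategies",
    audience="early-stage startup founders",
    tone="professional yet friendly",
    constraints=[
        "Use SEO-optimized language and include relevant keywords.",
        "Structure it with H2 headers and bullet points.",
        "End with a persuasive call-to-action.",
    ],
)
print(spec.render())
```

Because each requirement is a separate field, a team can swap the audience or tone without rewriting the whole prompt.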

As AI continues to disrupt nearly every sector, from healthcare to marketing to software development, the importance of high-quality AI output has never been higher. And that output starts with a prompt.

Key Benefits of Prompt Engineering:

Prompt engineering offers tangible benefits that affect both the quality of AI output and the efficiency of human-machine collaboration. These benefits become especially critical in high-stakes environments where precision, consistency, and context are non-negotiable.

- Increased Accuracy and Relevance: Well-structured prompts help guide the AI to stay focused on the topic and respond with contextually appropriate and accurate information. For example, asking an AI to “explain quantum computing to a high school student” is far more effective than simply saying “explain quantum computing.”

- Time Efficiency: A clear and intentional prompt reduces the need for multiple iterations or extensive post-editing. In a business context, this means teams can generate client-ready documents, reports, or content much faster.

- Customization and Control: Prompt engineering allows for fine-tuned control over output tone, style, voice, and complexity. Whether you’re generating social media captions, legal memos, or academic abstracts, you can tailor responses to suit a specific audience or brand identity.

- Alignment with Business Objectives: Prompting can be used strategically to align AI-generated content with larger goals such as SEO, sales funnel positioning, educational standards, or compliance requirements. It ensures that outputs are not just coherent, but actionable and on-message.

- Scalability of Output: When prompts are structured as templates or reusable components, organizations can scale high-quality content production across different use cases and departments—without reinventing the wheel each time.

- Risk Mitigation: Thoughtful prompt construction can help prevent undesirable or biased outputs by embedding safety language, neutral tone directives, and ethical context cues directly into the prompt.

Many people assume that the AI “just knows” what to do. However, even the most powerful large language models (LLMs) work by repeatedly predicting a likely next token (a word or piece of a word), based on patterns learned from their training data and on the text they are given. They don’t “understand” in a human sense. Therefore, the quality of the prompt largely determines the quality of the outcome.
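To make “predicting the next word” concrete, here is a deliberately tiny bigram model: it counts which word follows which in a small training text and picks the most frequent follower. Real LLMs use neural networks over tokens and far more context, so treat this only as an analogy; the training sentences are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict:
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict, word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` is unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A tiny, made-up "training set".
corpus = (
    "legal contracts need careful review . "
    "legal contracts need signatures . "
    "marketing posts need catchy headlines . "
    "marketing posts need catchy hooks ."
)
model = train_bigrams(corpus)

print(predict_next(model, "legal"))      # contracts
print(predict_next(model, "marketing"))  # posts
print(predict_next(model, "need"))       # catchy
print(predict_next(model, "quantum"))    # None
```

After “need” this toy model always predicts “catchy”, even in a legal context, because it only ever sees one word. LLMs condition on the whole prompt, which is why adding audience, role, and format details changes what they predict.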

Tips and Tricks: Getting Better Results

If you want better responses from your AI tool, understanding how prompt engineering works in real-world applications is key. These aren’t just abstract suggestions—they’re strategies being used every day in industries ranging from tech to education to healthcare.

Real-World Prompting Tactics That Work:

- Be Specific: Vague instructions lead to generic results. A social media team at a fashion brand found that prompting with “Write a tweet about our new product” yielded bland results. But specifying “Write a 240-character tweet announcing the release of our eco-friendly sneakers, using an upbeat and humorous tone” produced copy that significantly boosted engagement.

- Set the Tone: Clarifying the mood and audience helps AI strike the right balance. In legal contexts, for instance, asking AI to “summarize this court ruling in plain English for non-lawyers” made documents significantly more digestible for clients.

- Break Down Tasks: Avoid overwhelming the model with multiple requests at once. A startup founder who needed investor materials split their prompt into sections: company overview, problem, solution, traction, and team—leading to a cleaner pitch deck with better flow.

- Use Bullet Points: Bullet formatting encourages clarity. Educational platforms use this to help AI generate flashcards or study guides that students can quickly absorb.

- Iterate Often: Think of prompting like tuning an instrument. What works once may need tweaking later. An HR manager refined a job description prompt five times to get the perfect balance of inclusivity, clarity, and compliance.
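The “Break Down Tasks” tactic can be sketched as generating one narrowly scoped prompt per section instead of one large request. The section names come from the pitch-deck example above; the `section_prompts` function, the prompt wording, and the company name “Acme Analytics” are hypothetical, and each prompt would be sent to the model separately.

```python
def section_prompts(company: str, sections: list, max_words: int = 150) -> list:
    """Build one narrowly scoped prompt per section of a larger document."""
    prompts = []
    for i, section in enumerate(sections, start=1):
        prompts.append(
            f"You are helping draft an investor pitch deck for {company}. "
            f"Write only slide {i} of {len(sections)}: the '{section}' section. "
            f"Use bullet points and stay under {max_words} words."
        )
    return prompts


sections = ["company overview", "problem", "solution", "traction", "team"]
for prompt in section_prompts("Acme Analytics", sections):
    print(prompt)
```

Smaller prompts also make iteration cheaper: if the “traction” slide is weak, only that one prompt needs tweaking.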

Example of Prompt Evolution:

Initial Prompt: “Give me advice on launching a product.”

Refined Prompt: “You are a product launch consultant. Outline the top 5 steps for launching a mobile app in the fintech sector. Include realistic timelines, a marketing rollout plan, and key metrics for success. Use bullet points and keep it under 400 words.”

Result: The first prompt led to a basic blog-style answer that was too general. The refined version generated a professional-grade checklist that the team incorporated into their official launch strategy.

These refinements can be the difference between busywork and breakthrough. By focusing on clarity, structure, and experimentation, you can unlock a much deeper level of collaboration with AI.

Pro Tip:

Create a “Prompt Library” within your team or organization. Document successful prompts for specific use cases—content creation, data summaries, customer emails, etc.—so others can reuse and adapt them. This not only builds internal consistency but also speeds up onboarding and experimentation.
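A prompt library can start as nothing more than named templates with placeholders. This sketch uses Python’s standard `string.Template`, whose `substitute` method raises `KeyError` when a placeholder is left unfilled, which catches incomplete prompts early. The template names and wording are hypothetical examples, not recommended prompts.

```python
from string import Template

# Hypothetical team prompt library: use-case name -> reusable template.
PROMPT_LIBRARY = {
    "customer_email": Template(
        "You are a customer support specialist. Write a reply to $customer_name "
        "about $issue. Keep it under $max_words words and use a $tone tone."
    ),
    "data_summary": Template(
        "Summarize the following $dataset_name data for $audience in five bullet "
        "points, highlighting trends and anomalies:\n$data"
    ),
}


def build_prompt(name: str, **values: str) -> str:
    """Fill a library template; raises KeyError if a placeholder is missing."""
    return PROMPT_LIBRARY[name].substitute(**values)


prompt = build_prompt(
    "customer_email",
    customer_name="Dana",
    issue="a delayed order",
    max_words="120",
    tone="warm and professional",
)
print(prompt)
```

Keeping templates in code (or a shared file) means they can be reviewed and versioned like any other team asset.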

The Mechanics of Prompt Engineering

Prompt engineering isn’t just about saying more—it’s about saying the right things in the right way. The mechanics of prompt engineering are what elevate this skill from simple question-asking to a form of strategic communication with a highly advanced system. Getting the mechanics right is important because every element of a prompt influences how the model interprets intent, context, tone, and desired output structure.

Large language models are probabilistic text generators. They do not possess true understanding or intent but rely on massive training data to predict and generate human-like responses. Because of this, ambiguity in prompts can lead to generic, off-topic, or even misleading responses. But when you break down the task into discrete elements and guide the AI using proven techniques, the output becomes more focused, usable, and intelligent.

Effective prompt engineering involves blending psychology (understanding how people think), linguistics (how language is structured), and system logic (how AI models respond to stimuli). When done correctly, it allows humans to interface with AI in ways that amplify productivity and creativity.

Here are several foundational techniques that dramatically improve AI performance. Each serves a specific purpose in clarifying the user’s intent and aligning it with the model’s capabilities:

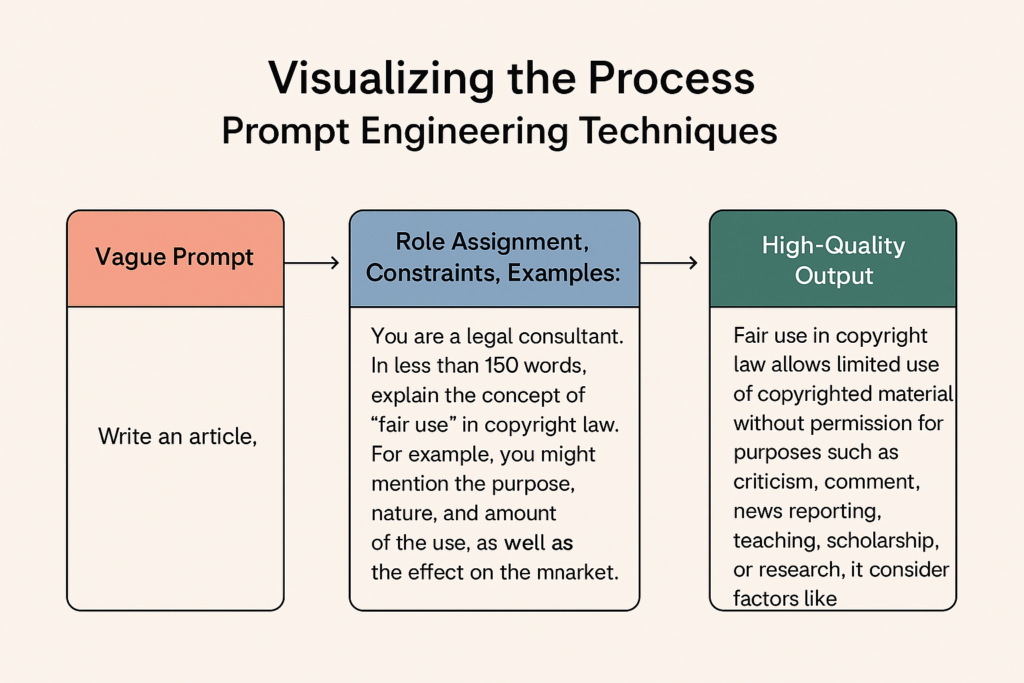

1. Role Assignment

Tell the AI who it is to better guide its response. This helps the model frame its answers within a relevant context and tone, improving domain-specific accuracy.

“You are a legal consultant specializing in intellectual property law.”

By assigning a role, you’re providing the model with a filter through which to generate responses. This is especially useful in professional or technical domains like medicine, law, or software engineering.

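In chat-style APIs, the role is commonly placed in a system message ahead of the user’s request. The sketch below only builds a message list in the `{"role": ..., "content": ...}` shape used by OpenAI’s Chat Completions API; it does not call any API, and the helper name `with_role` is hypothetical. (Anthropic’s Messages API takes the system prompt as a separate `system` parameter instead.)

```python
def with_role(role_description: str, user_request: str) -> list:
    """Return a chat message list that assigns the model a role first."""
    return [
        {"role": "system", "content": f"You are {role_description}."},
        {"role": "user", "content": user_request},
    ]


messages = with_role(
    "a legal consultant specializing in intellectual property law",
    "Can I use a competitor's product name in my ad copy?",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```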

2. Constraint Setting

Set specific parameters for output. Constraints like word count, format, language style, or even emotional tone help ensure the AI produces content that fits within your operational or branding needs.

“Write this in under 200 words using bullet points.”

Constraints minimize editing and improve the usability of the generated content by keeping it on-task and context-aware.

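Constraints are also easy to check after the fact. This sketch verifies a response against the two constraints in the example prompt, a word limit and bullet-point formatting. The rule that every non-empty line must start with “- ” or “* ” is an assumption about what “using bullet points” means, and `check_constraints` is a hypothetical helper.

```python
def check_constraints(text: str, max_words: int = 200) -> list:
    """Return a list of constraint violations (empty if the text passes)."""
    problems = []
    # Whitespace-separated tokens; bullet markers like "-" are counted too,
    # a deliberately simple approximation of word count.
    word_count = len(text.split())
    if word_count >= max_words:
        problems.append(f"too long: {word_count} words (limit is under {max_words})")
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    non_bullets = [line for line in lines if not line.startswith(("- ", "* "))]
    if non_bullets:
        problems.append(f"{len(non_bullets)} line(s) are not bullet points")
    return problems


good = "- Cut onboarding time\n- Reduce churn\n- Grow referrals"
bad = "Here is a summary.\n- Cut onboarding time"
print(check_constraints(good))  # []
print(check_constraints(bad))   # ['1 line(s) are not bullet points']
```

A check like this can flag outputs that need a retry before anyone has to edit them by hand.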

3. Chain-of-Thought Prompting

Encourage the model to reason step by step. This technique improves logical coherence, especially for complex queries, calculations, or problem-solving tasks.

“First, list the issues. Then explain each with examples.”

It mirrors how humans solve problems and helps the AI follow a structured path, leading to more thorough and thoughtful responses.

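A common way to apply this is to spell out the reasoning steps and ask for the final result on a clearly marked line, so it can be separated from the reasoning. The step wording and the “Final answer:” marker below are assumptions for illustration, and the sample response is hand-written, not real model output.

```python
STEPS = [
    "First, list the issues.",
    "Then explain each issue with an example.",
    "Finally, state your overall recommendation.",
]


def chain_of_thought_prompt(question: str) -> str:
    """Wrap a question with explicit, ordered reasoning steps."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(STEPS, start=1))
    return (
        f"{question}\n\nWork through this step by step:\n{numbered}\n"
        "End with a line that starts with 'Final answer:'."
    )


def extract_final_answer(response: str):
    """Return the text after the last 'Final answer:' marker, or None."""
    for line in reversed(response.splitlines()):
        if line.strip().lower().startswith("final answer:"):
            return line.split(":", 1)[1].strip()
    return None


print(chain_of_thought_prompt("Should we migrate our blog to a static site?"))

# Hand-written stand-in for a model response.
sample = "1. Issues: hosting cost, slow pages.\n2. ...\nFinal answer: Yes, migrate."
print(extract_final_answer(sample))  # Yes, migrate.
```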

4. Few-Shot Learning

Provide examples for the model to mimic. By offering 1-3 examples, you show the model the format, tone, and content style you’re expecting.

“Here are two examples of good answers. Use a similar format.”

Few-shot learning allows you to teach the model what “good” looks like, helping it generalize better to your use case even without custom training.

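The sketch below assembles a few-shot prompt from input/output pairs and ends with the new input plus an empty “Output:” for the model to complete. The support-ticket examples and the “Input:”/“Output:” labels are made up for illustration; any consistent format works, as long as the examples all follow it.

```python
def few_shot_prompt(instruction: str, examples: list, query: str) -> str:
    """Build a prompt that shows input/output pairs before the real query."""
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # End with the new input and an empty "Output:" for the model to complete.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


examples = [
    ("The app crashes when I upload a photo.", "Category: Bug | Priority: High"),
    ("Could you add a dark mode?", "Category: Feature request | Priority: Low"),
]
print(few_shot_prompt(
    "Classify each support ticket. Use a similar format.",
    examples,
    "I was charged twice this month.",
))
```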

5. System-Level Instructions

Define behavior at a structural level, for example through the system message in chat APIs such as OpenAI’s and Anthropic’s, or through Custom Instructions in ChatGPT. These instructions apply across an entire conversation and can influence model behavior more globally.

e.g., “Always answer in the voice of a seasoned professor.”

These are particularly powerful for long-term projects or for keeping a consistent voice and tone across a series of tasks or outputs.

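With chat APIs, a system instruction “persists” because the application resends it at the top of the message list on every request. This minimal sketch keeps that list for one conversation; the `Conversation` class is hypothetical, it sends nothing to any model, and the assistant reply shown is a placeholder for whatever your API returns.

```python
class Conversation:
    """Keeps one system instruction in front of every request in a chat."""

    def __init__(self, system_instruction: str) -> None:
        self.system = {"role": "system", "content": system_instruction}
        self.history = []

    def next_request(self, user_message: str) -> list:
        """Record the user turn and return the full message list to send."""
        self.history.append({"role": "user", "content": user_message})
        return [self.system] + self.history

    def record_reply(self, assistant_message: str) -> None:
        """Store the model's reply so later turns keep the context."""
        self.history.append({"role": "assistant", "content": assistant_message})


chat = Conversation("Always answer in the voice of a seasoned professor.")
first = chat.next_request("What is inflation?")
chat.record_reply("Ah, inflation, my dear student, is ...")  # placeholder reply
second = chat.next_request("And deflation?")
print(len(first), len(second))  # 2 4
print(second[0]["content"])
```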

What the Experts Say

Prompt engineering is being recognized as a foundational skill by thought leaders and practitioners across multiple industries. As AI becomes more integrated into our workflows, the voices shaping its use are emphasizing the growing importance of learning how to communicate effectively with these systems.

“Prompt engineering is how humans and AI co-author creativity. The better your prompt, the smarter the machine looks.” — Ethan Mollick, Wharton Professor and AI Educator

Mollick has been a strong advocate for integrating prompt engineering into business school curricula, seeing it as an essential literacy for future leaders.

“In the same way we taught spreadsheet modeling and email etiquette, we’re now teaching students how to write good prompts. It’s a new workplace language.” — Ajeya Cotra, AI Researcher at Open Philanthropy

Leading organizations, including Google, OpenAI, and Anthropic, now provide training and documentation aimed at improving prompt quality. The consensus is clear: the more refined your input, the more reliable, safe, and efficient your AI collaboration becomes.

Even policy makers are starting to pay attention. The ability to write responsible prompts is seen as a safeguard against misinformation, bias, and misuse of generative AI tools.

“Prompt engineering sits at the intersection of technical skill and ethical responsibility. Getting it right isn’t just a productivity booster—it’s essential to building trustworthy AI.” — Margaret Mitchell, Chief Ethics Scientist, Hugging Face

These insights make it clear that prompt engineering isn’t a temporary fad, but rather a long-term skillset that will define how we shape, manage, and govern human-AI interaction.

The Rise of Prompt Engineering Jobs

One of the most telling signs of the value of prompt engineering is the rapidly growing job market surrounding it. As generative AI tools have become increasingly integrated into day-to-day workflows, the demand for professionals who can effectively interface with these systems has skyrocketed.

In 2024, job listings for titles like “Prompt Engineer,” “AI Interaction Designer,” and “AI Content Strategist” surged across tech consultancies, startups, and even Fortune 500 companies. Roles that didn’t traditionally touch AI—such as instructional design, marketing operations, and product management—are now including prompt engineering responsibilities as core job functions.

“Prompt engineering is the UX of AI. It’s not about code—it’s about shaping behavior through language.” — Sahil Lavingia, CEO of Gumroad

This shift reflects a broader trend: the democratization of AI. As tools like ChatGPT and Claude become more accessible, it’s not just developers who interact with models—it’s marketers, lawyers, salespeople, and teachers. Learning how to write high-impact prompts is becoming as fundamental as learning Excel was in the early 2000s.

“The people who learn prompt engineering today will have an edge over the AI tools of tomorrow. It’s the new literacy of the workplace.” — Nat Friedman, former CEO of GitHub

Salaries in this field are catching attention too. OpenAI and Anthropic have publicly listed prompt engineering roles with compensation reaching $250K–$300K/year, reflecting the strategic value of this expertise. But beyond high-profile roles, everyday applications are making a difference: crafting legal summaries, writing product documentation, and automating internal communications.

Why Learn Prompt Engineering Now vs. Later?

- AI Is Already Here: LLMs are no longer experimental—they’re operational. Businesses are adopting them faster than most professionals can adapt.

- Early Movers Win: Just like early SEO experts gained massive influence in the 2010s, early prompt engineers are establishing their thought leadership and career leverage now.

- Low Barrier, High Return: Unlike learning to code, prompt engineering doesn’t require technical expertise. Anyone can begin, iterate, and improve with practice.

- Future-Proofing Your Career: As AI changes how work gets done, understanding how to collaborate with it becomes crucial.

This isn’t a passing trend. Prompt engineering is becoming the connective tissue between humans and intelligent systems. Learning how to write for machines may soon be as important as learning how to write for people.

Tools and Frameworks Emerging Around Prompts

As prompt engineering becomes more central to how we work with AI, a rich ecosystem of tools and frameworks has started to emerge—designed to streamline, automate, and elevate prompt design. These tools are enabling both technical and non-technical users to develop consistent, reusable, and highly effective prompt workflows.

Popular Tools Enhancing Prompt Workflows:

- PromptBase: A marketplace where users can buy, sell, and test high-performing prompts for different use cases, from content writing to customer support.

- FlowGPT: A community platform offering shared prompt templates, categorized by industry, goal, and model compatibility.

- LangChain: An open-source framework for chaining prompts and model outputs into logic-based workflows. Commonly used by developers to build AI agents that require memory and reasoning.

- PromptLayer: A prompt engineering observability tool that tracks, logs, and evaluates the performance of different prompts. It’s used by teams deploying LLMs in production environments.

- Replit Ghostwriter / Notion AI / Jasper: Platforms embedding prompt patterns into user-friendly tools so users don’t need to see the raw prompt, but still benefit from engineered responses.

Why These Tools Matter:

These frameworks and platforms reduce friction in AI interaction. Instead of writing prompts from scratch each time, users can:

- Reuse templates with proven performance.

- A/B test multiple prompt versions.

- Fine-tune prompts for tone, format, or data accuracy.

- Build layered prompt chains for complex, multi-step outputs.
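A/B testing prompt versions comes down to running each version on the same inputs and comparing scores. In this sketch, `fake_model` is a stand-in for a real model call (it just echoes the prompt so the demo is deterministic), and the scoring rule, whether the response mentions required keywords, is an assumption; in practice you would plug in your own model client and evaluation criteria.

```python
from typing import Callable


def ab_test(
    prompts: dict,
    inputs: list,
    model: Callable[[str], str],
    score: Callable[[str], float],
) -> dict:
    """Return the average score of each prompt version over the same inputs."""
    results = {}
    for name, template in prompts.items():
        scores = [score(model(template.format(topic=topic))) for topic in inputs]
        results[name] = sum(scores) / len(scores)
    return results


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM call: echoes the prompt so the demo is deterministic.
    return prompt


def keyword_score(response: str, keywords=("SEO", "call-to-action")) -> float:
    # Fraction of required keywords that appear in the response.
    return sum(k in response for k in keywords) / len(keywords)


versions = {
    "A": "Write a blog post about {topic}.",
    "B": "Write an SEO-friendly blog post about {topic} that ends with a call-to-action.",
}
print(ab_test(versions, ["pricing", "onboarding"], fake_model, keyword_score))
# {'A': 0.0, 'B': 1.0}
```

Keeping the inputs fixed across versions is the design choice that makes the comparison fair: only the prompt changes.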

“Prompt engineering is becoming modular. The future isn’t just about writing great prompts—it’s about building systems that manage, adapt, and evolve them at scale.” — Harrison Chase, Creator of LangChain

PromptOps, an operations approach centered on prompt lifecycle management, is also emerging inside enterprise AI deployments. Just as DevOps brought structure to software delivery, PromptOps aims to make prompt engineering a discipline with clear structure, tooling, and measurable outcomes.
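At its simplest, prompt lifecycle management means treating prompts as versioned artifacts: every change gets a new version number, and earlier versions stay available for comparison or rollback. The `PromptRegistry` class below is a hypothetical, in-memory sketch of that idea only; tools like PromptLayer, described above, layer logging and evaluation on top of this kind of tracking.

```python
from datetime import datetime, timezone
from typing import Optional


class PromptRegistry:
    """In-memory store of prompt versions, keyed by prompt name."""

    def __init__(self) -> None:
        self._versions = {}

    def publish(self, name: str, text: str, note: str = "") -> int:
        """Save a new version of a prompt and return its version number."""
        versions = self._versions.setdefault(name, [])
        versions.append({
            "version": len(versions) + 1,
            "text": text,
            "note": note,
            "created": datetime.now(timezone.utc).isoformat(),
        })
        return len(versions)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return a specific version, or the latest if none is given."""
        versions = self._versions[name]
        if version is None:
            return versions[-1]["text"]
        if not 1 <= version <= len(versions):
            raise ValueError(f"{name} has no version {version}")
        return versions[version - 1]["text"]

    def rollback(self, name: str, version: int) -> int:
        """Republish an earlier version as the newest one (history is kept)."""
        return self.publish(name, self.get(name, version), note=f"rollback to v{version}")


registry = PromptRegistry()
registry.publish("support_reply", "Reply politely to: {message}")
registry.publish("support_reply", "Reply politely and briefly to: {message}", note="shorter")
registry.rollback("support_reply", 1)
print(registry.get("support_reply"))  # Reply politely to: {message}
```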

Whether you’re a solo creator writing AI emails or a large company deploying chatbots across customer service, having the right prompt tools ensures better results, faster iteration, and more scalable success.

Call to Action: Start Prompting with Purpose

If you’ve made it this far, it’s clear you’re ready to elevate how you work with AI. Don’t wait for the future of work to find you—create it yourself. Whether you’re a founder, marketer, educator, developer, or just curious about AI, start building your prompt engineering skills today.

✅ Follow industry leaders and communities like FlowGPT, LangChain, and PromptBase.

✅ Practice by refining prompts in your daily workflows: emails, summaries, reports, and more.

The people shaping AI aren’t just building models—they’re designing the conversations. Start crafting yours.

Conclusion: Why Prompt Engineering Matters Now More Than Ever

Prompt engineering is more than a skill—it’s a new form of literacy. As large language models become embedded in everything from our browsers to our business strategies, the ability to communicate clearly, ethically, and effectively with these systems becomes a core professional advantage.

From saving time and improving clarity to scaling content creation and minimizing bias, the power of prompt engineering is already transforming the way we work and innovate. It bridges the gap between human intention and machine output—putting the creative direction back in your hands.

So whether you’re just getting started or refining your practice, know this: the next generation of impactful professionals won’t just know how to use AI—they’ll know how to talk to it.

Recommended Reading:

- “The Art of Prompt Engineering with ChatGPT” – Nathan Hunter: A hands-on guide for beginners and professionals alike.

- “Building Systems with the ChatGPT API” – Isa Fulford (OpenAI) & Andrew Ng, DeepLearning.AI: Great for developers looking to integrate prompt engineering into applications.

- “Language Models are Few-Shot Learners” – Brown et al. (2020): The original GPT-3 research paper, which showed that a large model can pick up a task from a few examples given in the prompt (few-shot, in-context learning).

Tools to Explore:

- PromptBase – Marketplace for buying and selling quality prompts.

- LangChain – Build prompt-based applications with logic and memory.

- FlowGPT – Discover and share high-performing prompts by use case.

- PromptLayer – Monitor, log, and evaluate prompt effectiveness over time.

Communities and Newsletters:

- The Rundown AI – Stay updated on AI tools and trends.

- Prompt Engineering Daily (Substack) – Real-world prompt examples and industry insights.

- AI Exchange on Discord – A lively space to discuss prompt ideas, tools, and applications.

Online Courses:

- OpenAI Learn: ChatGPT for Beginners

- DeepLearning.AI: ChatGPT Prompt Engineering for Developers